Entropy, Chemistry, and the Thermodynamic Potentials

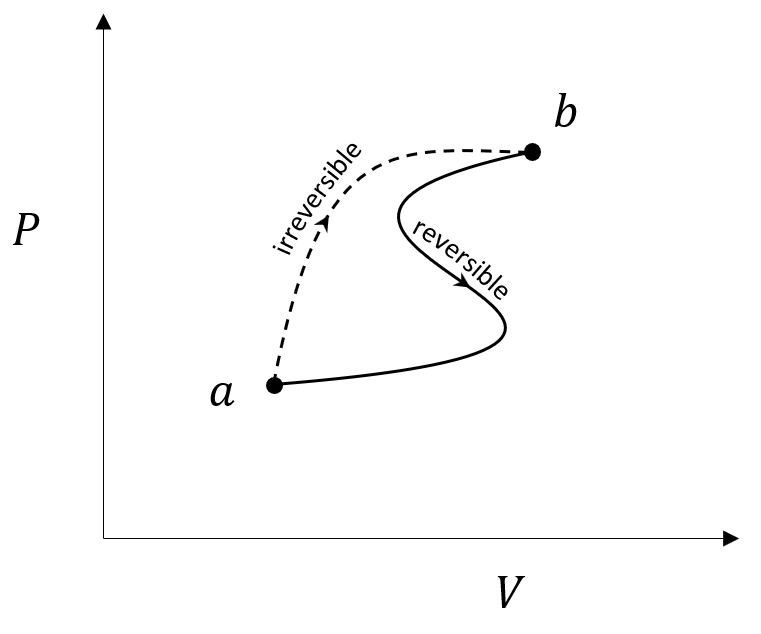

The example of supercooled water from last month’s post was instructive both for showing the usefulness of state variables and for understanding irreversible processes. However, the effectiveness of the approach was blunted by the fact that the supercooled drop, in spontaneously freezing, actually experienced a decrease in entropy rather than the increase we might naively expect from the Clausius inequality. In order to argue that the ‘sudden freeze’ mentioned in the problem statement was truly irreversible, we had to calculate the change in the entropy of the surroundings and confirm that the total entropy change of the universe (drop and surroundings) was greater than zero: $\Delta S_{uni} > 0$.

The need to look at both the system in question and the surroundings is awkward and inconvenient, especially for the chemist. Perhaps no text expresses the distaste for using entropy change directly as sharply and succinctly as Physical Chemistry by J. Edmund White, in which the author has a bolded heading in Chapter 6 that reads “Entropy is not a satisfactory criterion of spontaneity”.

White argues in that section that “the practicing chemist needs [a] way to predict…whether a reaction is spontaneous”. He first notes that scientists originally thought that negative changes in internal energy or enthalpy ($\Delta U_{S,V} \leq 0$ or $\Delta H_{S,P} \leq 0$ in his notation) would be good indicators of spontaneous reactions. He then offers the counterexample of ice at $21\,{}^{\circ}\mathrm{C}$, which melts spontaneously even though the change in both its internal energy and its enthalpy is clearly positive.

He then goes on to also reject entropy for the reasons discussed above. In disposing of entropy he remarks that “[a] more useful criterion would depend only on the process or system and would apply under the usual conditions for chemical reactions: constant $T$ and $P$ or constant $T$ and $V$.”
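White's melting-ice counterexample, and the reason entropy only settles it once the surroundings are brought in, can be checked numerically. The Python sketch below treats one mole of ice melting in a room at $21\,{}^{\circ}\mathrm{C}$ that acts as a heat bath. As a simplifying assumption, it uses an approximate textbook enthalpy of fusion (about 6.01 kJ/mol) and treats it and the entropy of fusion as temperature independent; the function name is purely illustrative.

```python
# Minimal sketch: entropy budget for one mole of ice melting in a room
# that acts as a heat bath. Assumption: the enthalpy and entropy of fusion
# are taken at 0 C and treated as constant (heat-capacity corrections ignored).

DELTA_H_FUS = 6010.0                 # J/mol, approximate enthalpy of fusion of ice
T_MELT = 273.15                      # K, normal melting point
DELTA_S_FUS = DELTA_H_FUS / T_MELT   # J/(mol K), entropy of fusion

def melting_entropy_budget(t_celsius: float) -> tuple[float, float, float]:
    """Return (dS_sys, dS_sur, dS_uni) in J/(mol K) for melting at t_celsius."""
    T = t_celsius + 273.15
    dS_sys = DELTA_S_FUS
    dS_sur = -DELTA_H_FUS / T        # the room supplies the heat the ice absorbs
    return dS_sys, dS_sur, dS_sys + dS_sur

dS_sys, dS_sur, dS_uni = melting_entropy_budget(21.0)
print(f"dH = {DELTA_H_FUS:+.0f} J/mol (positive, yet the ice melts)")
print(f"dS_sys = {dS_sys:+.2f}, dS_sur = {dS_sur:+.2f}, dS_uni = {dS_uni:+.2f} J/(mol K)")
```

With these assumed values, $\Delta S_{sys} \approx +22.0$ and $\Delta S_{sur} \approx -20.4$ J/(mol K), so $\Delta S_{uni} \approx +1.6$ J/(mol K). The verdict depends on the surroundings term, which is exactly White's complaint; at $-10\,{}^{\circ}\mathrm{C}$ the same budget comes out negative.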

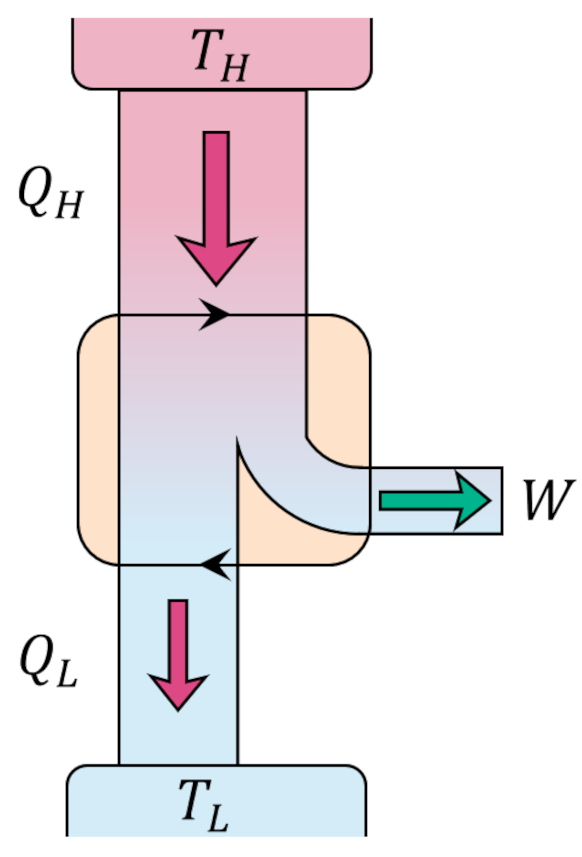

At this point White introduces the two thermodynamic potentials, the Gibbs free energy $G = U + PV - TS$ and the Helmholtz free energy $F = U - TS$, and off he goes. To understand why these are the right tools, remember that spontaneous processes occur when

\[ \Delta S_{uni} = \Delta S_{sys} + \Delta S_{sur} \geq 0 \; .\]

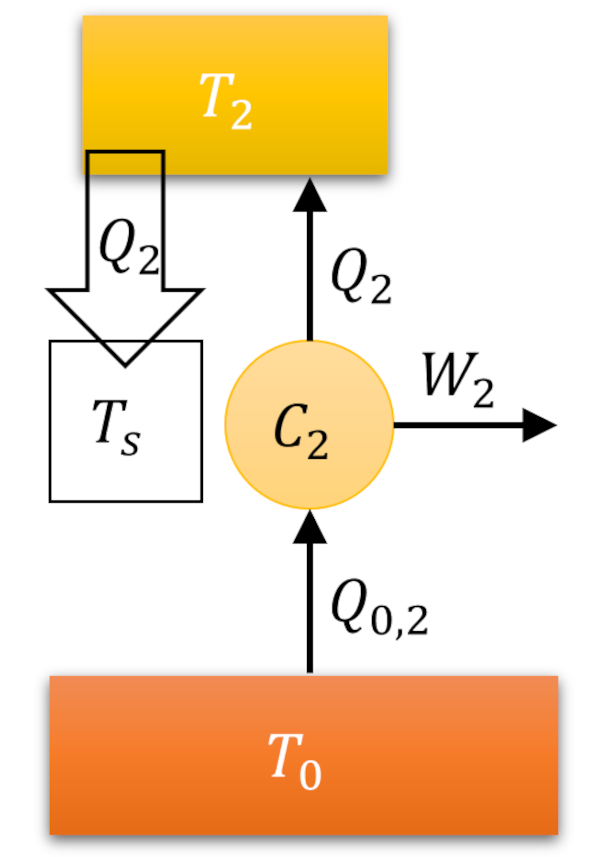

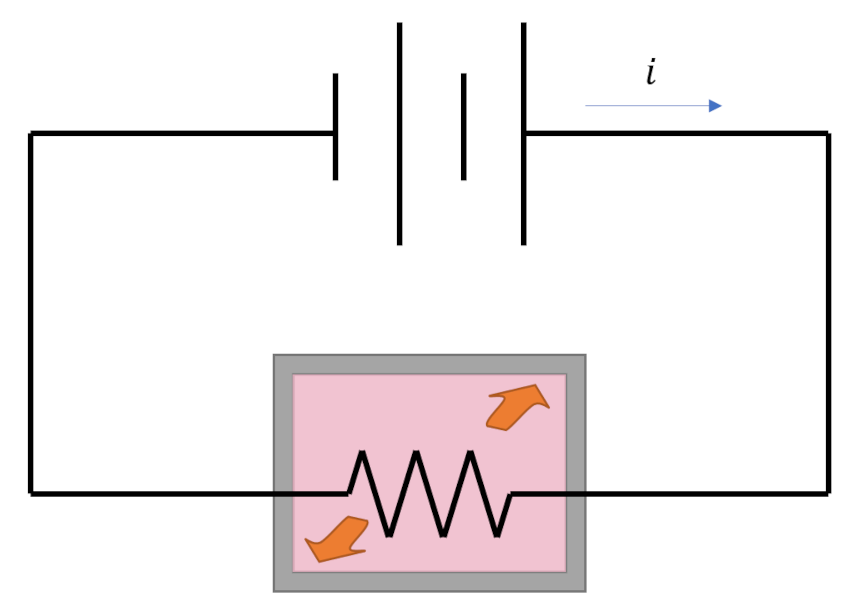

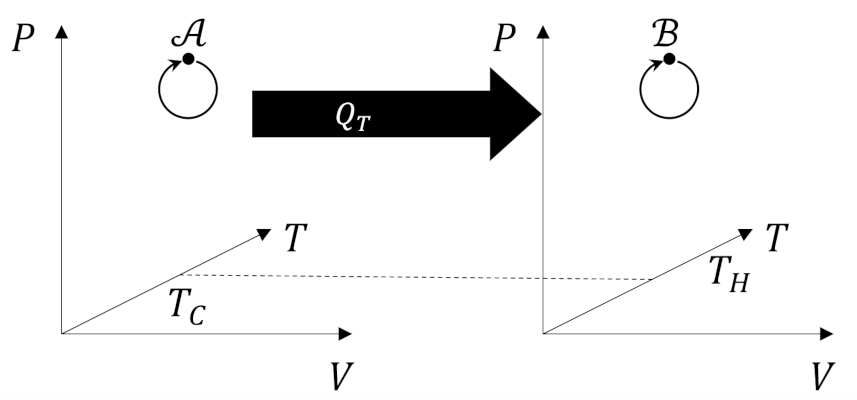

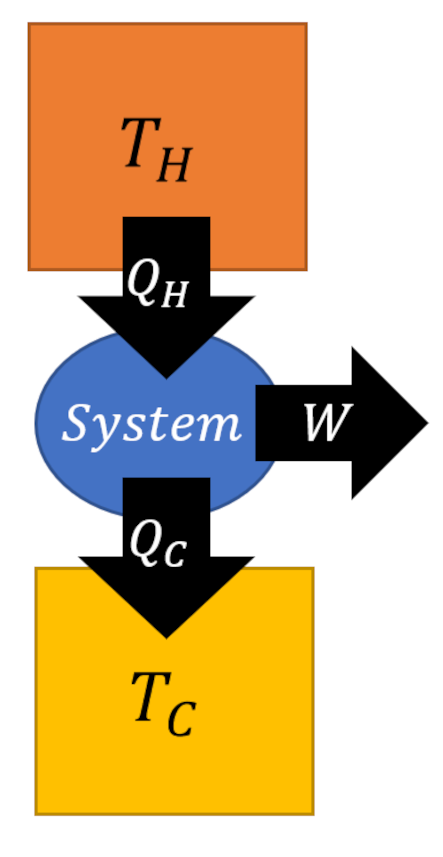

In its interaction with the system, the surroundings either absorb heat from or supply heat to the system, so that $Q_{sys} = -Q_{sur}$. If the surroundings constitute a reservoir large enough that $T$ stays constant (i.e., a heat bath), the change in entropy of the surroundings can be expressed as

\[ \Delta S_{sur} = \frac{Q_{sur}}{T} = - \frac{Q_{sys}}{T} \; .\]

With this result, we can now write the total change in the entropy of the universe without referencing anything more than the system (even the temperature, since it is constant, can be measured in the system):

\[ \Delta S_{uni} = \Delta S_{sys} - \frac{Q_{sys}}{T} \; .\]
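Last month's supercooled drop makes a good test of this system-only form. The Python sketch below assumes one mole of water supercooled to $-10\,{}^{\circ}\mathrm{C}$ freezing at atmospheric pressure in a bath at the same temperature, with approximate constant heat capacities ($C_P \approx 75.3$ J/(mol K) for the liquid, $\approx 37.7$ J/(mol K) for ice) and enthalpy of fusion ($\approx 6.01$ kJ/mol). These property values and the function name are assumptions for illustration. $\Delta S_{sys}$ is computed along a reversible three-step path, and $Q_{sys}$ is taken as the enthalpy change along the same path, which is valid at constant pressure.

```python
import math

# Minimal sketch: one mole of supercooled water freezing in a heat bath at T.
# Assumed (approximate) constant property values:
CP_LIQ = 75.3      # J/(mol K), heat capacity of liquid water
CP_ICE = 37.7      # J/(mol K), heat capacity of ice
DH_FUS = 6010.0    # J/mol, enthalpy of fusion at the normal melting point
T_M = 273.15       # K, normal melting point

def freeze_supercooled(T: float) -> dict[str, float]:
    """Entropy budget for freezing at T < T_M via a reversible 3-step path."""
    # Path: warm liquid T -> T_M, freeze reversibly at T_M, cool ice T_M -> T.
    dS_sys = (CP_LIQ * math.log(T_M / T)
              - DH_FUS / T_M
              + CP_ICE * math.log(T / T_M))
    # At constant pressure the heat equals the enthalpy change, which
    # (being a state function) can be summed along the same path.
    Q_sys = CP_LIQ * (T_M - T) - DH_FUS + CP_ICE * (T - T_M)
    dS_uni = dS_sys - Q_sys / T   # only system quantities appear here
    return {"dS_sys": dS_sys, "Q_sys": Q_sys, "dS_uni": dS_uni}

budget = freeze_supercooled(263.15)
for name, value in budget.items():
    print(f"{name:>7s} = {value:+.2f}")
```

With these assumed values the drop's own entropy falls by roughly 20.6 J/(mol K), yet $\Delta S_{uni}$ comes out small but positive (about $+0.8$ J/(mol K)), without any separate bookkeeping for the surroundings.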

At this point, we’ve eliminated any need to refer to the surroundings, and we will usually drop the ‘sys’ subscript in what follows.

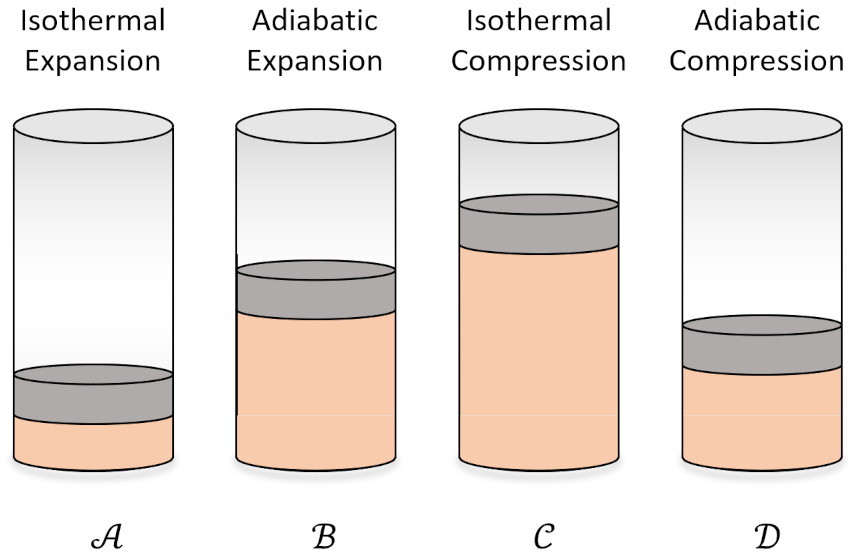

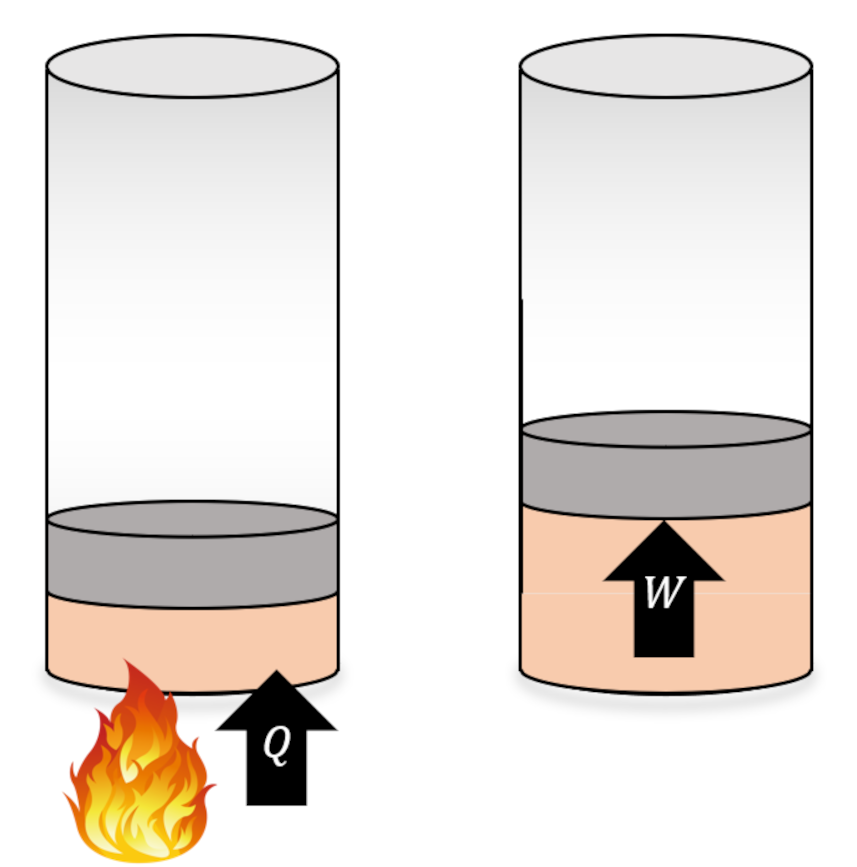

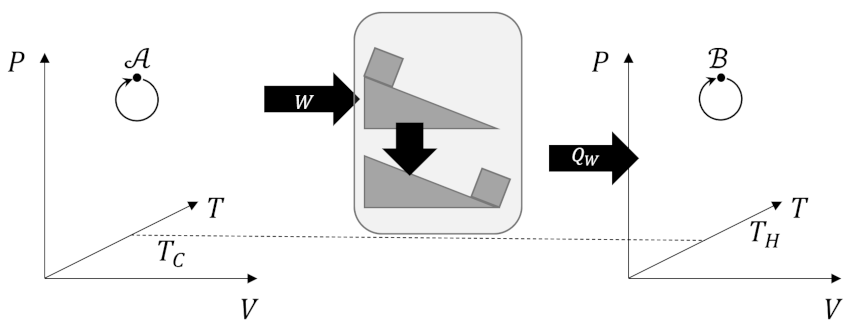

Now if the surroundings also confine the system to a constant volume $V$ (e.g., the process takes place in a pressure cooker) and the only work is mechanical ($PV$) work, we know that the work performed by the system is identically zero and, by the first law,

\[ \Delta U = Q_{sys} \; . \]

Combining the previous two results, we can express the total entropy change of the universe as

\[ \Delta S_{uni} = \Delta S_{sys} - \frac{\Delta U}{T} \; .\]

Next, we use the Helmholtz free energy

\[ F = U - TS \]

as an auxiliary function for the system, whose differential is

\[ dF = dU - S\,dT - T\,dS = dU - T\,dS \; , \]

where we used the constant-temperature assumption to set $dT = 0$. Because every term in this relationship is built from state variables, it also holds for finite changes, $\Delta F = \Delta U - T \Delta S$. Combining this with the expression relating the total entropy change of the universe to the system, rearranged as $\Delta U = T \Delta S - T \Delta S_{uni}$, we get

\[ \Delta F = (T \Delta S - T \Delta S_{uni}) - T \Delta S = -T \Delta S_{uni} \; . \]

Since $\Delta S_{uni} > 0$ must be satisfied for spontaneity and $T > 0$, we see that the criterion of spontaneity for processes that take place at a constant temperature and volume is

\[ \Delta F < 0 \; \]

with no reference to anything outside the system or process of interest.
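A minimal Python sketch of the constant-temperature, constant-volume test follows. The function names and the sample $(\Delta U, \Delta S, T)$ values are hypothetical, chosen only to illustrate that the sign of $\Delta F$ and the sign of $\Delta S_{uni}$ always give the same verdict, since $\Delta F = -T\,\Delta S_{uni}$.

```python
# Minimal sketch of the constant-T, constant-V spontaneity test.
# Function names and sample values are hypothetical illustrations.

def helmholtz_change(dU: float, dS: float, T: float) -> float:
    """Delta F = Delta U - T * Delta S (system quantities, constant T)."""
    return dU - T * dS

def universe_entropy_change(dU: float, dS: float, T: float) -> float:
    """Delta S_uni = Delta S - Delta U / T (constant T and V, heat bath)."""
    return dS - dU / T

def spontaneous_at_const_TV(dU: float, dS: float, T: float) -> bool:
    """Spontaneous when Delta F < 0, equivalently when Delta S_uni > 0."""
    return helmholtz_change(dU, dS, T) < 0.0

# Sample cases: (Delta U in J, Delta S in J/K, T in K)
for dU, dS, T in [(-500.0, -1.0, 300.0), (500.0, 1.0, 300.0), (500.0, 2.0, 300.0)]:
    dF = helmholtz_change(dU, dS, T)
    dS_uni = universe_entropy_change(dU, dS, T)
    print(f"dF = {dF:+.1f} J, -T*dS_uni = {-T * dS_uni:+.1f} J, "
          f"spontaneous: {spontaneous_at_const_TV(dU, dS, T)}")
```

The first sample case is spontaneous even though the system's entropy drops, because the energy it releases raises the entropy of the bath by more; the third is spontaneous even though the system absorbs energy.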

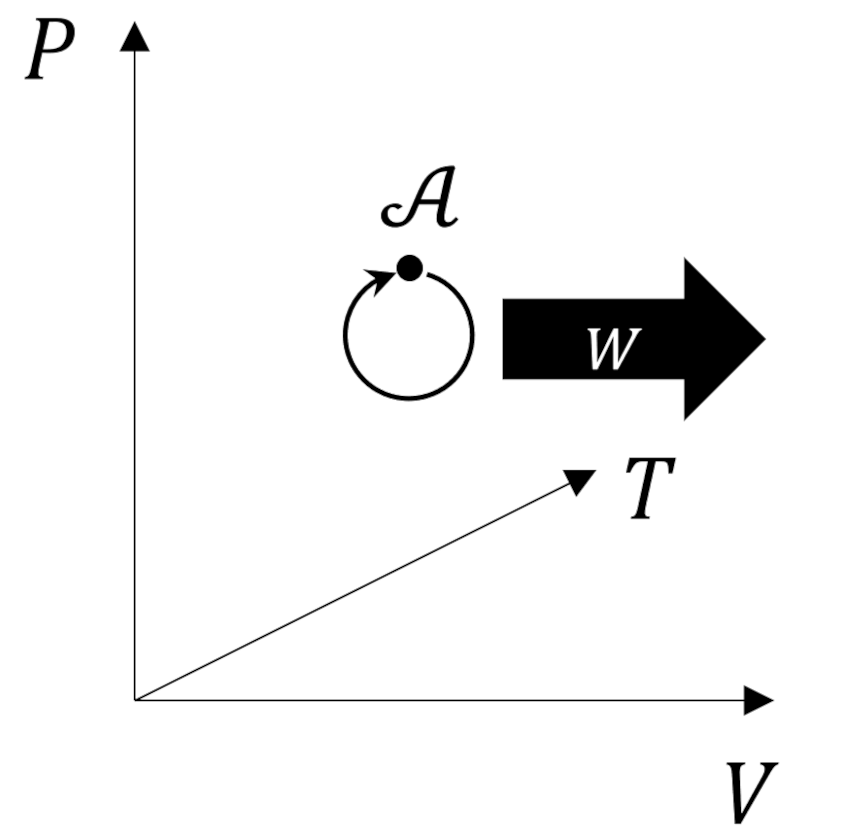

The value of the Helmholtz free energy, which is often called the Helmholtz potential and is sometimes denoted by $A$ (from the German word Arbeit, which means work), represents that portion of the store of internal energy that is available to do work; more precisely, at constant temperature the decrease $-\Delta F$ is the maximum work the system can perform.
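To make "available to do work" concrete, here is a Python sketch for an assumed example not taken from the text: one mole of an ideal gas expanding isothermally and reversibly to twice its volume at 300 K. For an ideal gas at constant $T$, $\Delta U = 0$ and $\Delta S = nR\ln(V_2/V_1)$, so $\Delta F = -nRT\ln(V_2/V_1)$; numerically integrating $P\,dV$ with $P = nRT/V$ should recover the same magnitude. The function names are illustrative.

```python
import math

# Minimal sketch, assuming n moles of an ideal gas expanding isothermally
# and reversibly from V1 to V2 while in contact with a heat bath at T.
R = 8.314  # J/(mol K), gas constant

def delta_F_ideal_isothermal(n: float, T: float, V1: float, V2: float) -> float:
    """Delta F = Delta U - T Delta S with Delta U = 0, Delta S = nR ln(V2/V1)."""
    return -n * R * T * math.log(V2 / V1)

def reversible_work(n: float, T: float, V1: float, V2: float, steps: int = 10_000) -> float:
    """Work done BY the gas: integral of P dV with P = nRT/V (midpoint rule)."""
    dV = (V2 - V1) / steps
    return sum(n * R * T / (V1 + (i + 0.5) * dV) * dV for i in range(steps))

n, T, V1, V2 = 1.0, 300.0, 1.0e-3, 2.0e-3   # mol, K, m^3, m^3
print(f"Delta F        = {delta_F_ideal_isothermal(n, T, V1, V2):+.2f} J")
print(f"reversible work = {reversible_work(n, T, V1, V2):+.2f} J")
```

Both numbers come out near 1.73 kJ in magnitude with opposite signs: the reversible work extracted equals the drop in $F$, and any irreversible version of the expansion would deliver less.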

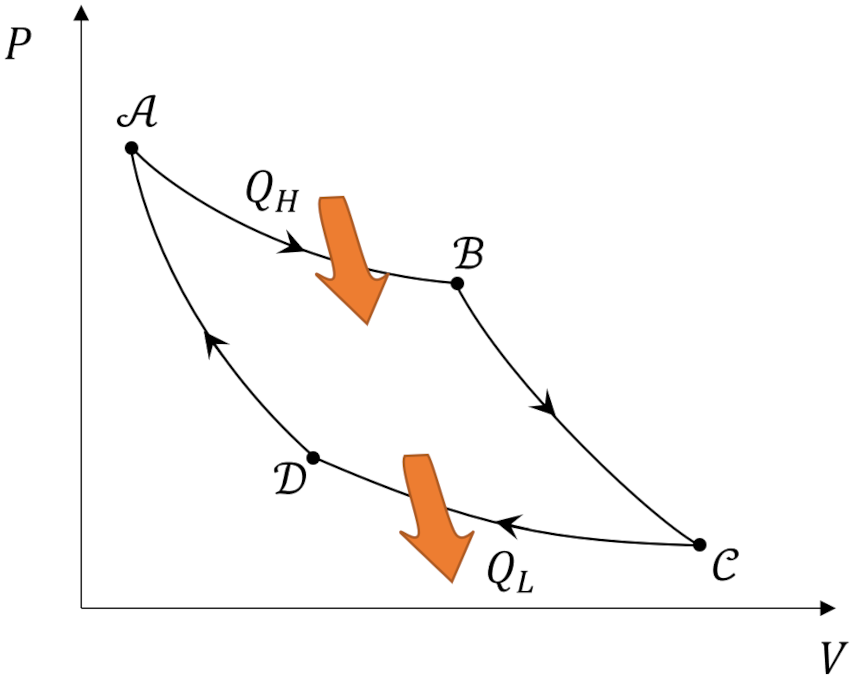

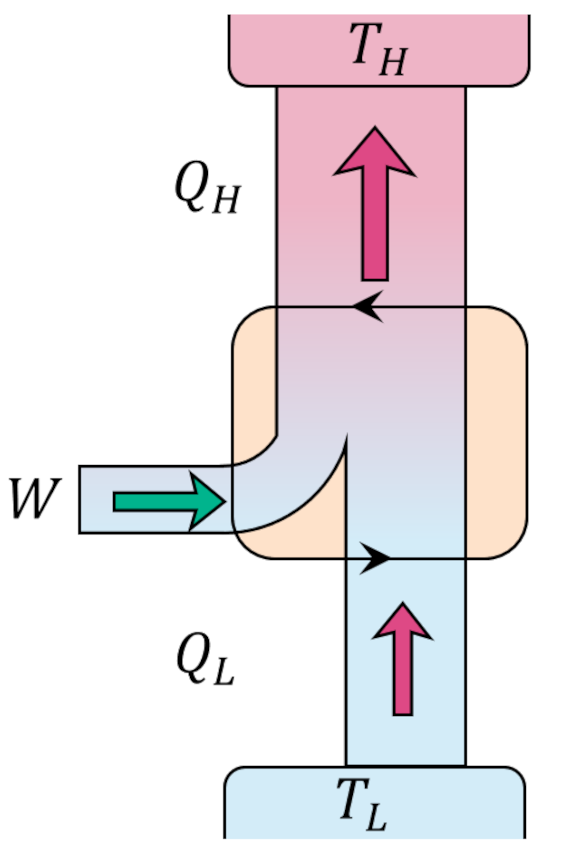

Alternatively, if the surroundings provide a constant pressure (e.g., the process takes place in a vessel open to the atmosphere), we can use the Gibbs free energy for the system

\[ G = U + PV - TS \; .\]

This case is slightly more complicated, and it helps to use the definition of enthalpy, $H = U + PV$, since doing so allows us to relate the heat absorbed by the system at constant pressure to the change in enthalpy.

Taking the change in enthalpy at constant pressure and using the first law, $\Delta U = Q_{sys} - W$, gives

\[ \Delta H = \Delta U + P \Delta V = Q_{sys} - W + P \Delta V \; . \]

Assuming the system work is entirely mechanical expansion/compression work (i.e., $PV$ work, so that $W = P \Delta V$) gives

\[ \Delta H = Q_{sys} \; .\]

This result leads to a similar equation for the total entropy change

\[ \Delta S_{uni} = \Delta S_{sys} - \frac{\Delta H}{T} \; . \]
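The bookkeeping behind $\Delta H = Q_{sys}$ needs only constant pressure and $PV$ work, and can be checked with a small Python sketch. The example is an assumption chosen for illustration: one mole of a monatomic ideal gas heated from 300 K to 350 K at atmospheric pressure, with $C_V = \tfrac{3}{2}R$ and $C_P = C_V + R$.

```python
# Minimal sketch: first-law bookkeeping for n moles of a monatomic ideal gas
# heated at constant pressure (assumed example values, SI units).
R = 8.314                  # J/(mol K), gas constant
CV = 1.5 * R               # molar heat capacity at constant volume (monatomic)
CP = CV + R                # molar heat capacity at constant pressure

n, P = 1.0, 101_325.0      # mol, Pa
T1, T2 = 300.0, 350.0      # K

V1, V2 = n * R * T1 / P, n * R * T2 / P
Q_sys = n * CP * (T2 - T1)     # heat absorbed at constant pressure
W = P * (V2 - V1)              # only P-V work is done by the gas
dU = n * CV * (T2 - T1)        # ideal-gas internal energy depends on T only
dH = dU + P * (V2 - V1)        # enthalpy change at constant P

print(f"dU = {dU:.2f} J, Q_sys - W = {Q_sys - W:.2f} J")
print(f"dH = {dH:.2f} J, Q_sys = {Q_sys:.2f} J")
```

Both lines should print matching pairs: the first law holds, and the $P\Delta V$ term in the enthalpy exactly cancels the work, leaving $\Delta H = Q_{sys}$.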

We then take the change in the Gibbs free energy at constant temperature and pressure

\[ \Delta G = \Delta H - T \Delta S_{sys} \; , \]

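and then use the previous result, rearranged as $\Delta H = T \Delta S_{sys} - T \Delta S_{uni}$, to eliminate the enthalpy change in favor of the change in the total entropy, giving $\Delta G = -T \Delta S_{uni}$. Since $\Delta S_{uni} > 0$ for a spontaneous process, the criterion for spontaneous processes at constant temperature and pressure is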

\[ \Delta G < 0 \; .\]
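Returning to White's melting-ice counterexample, the Gibbs criterion settles it from system properties alone. The Python sketch below again assumes an approximate enthalpy of fusion of 6.01 kJ/mol and treats it and the entropy of fusion as temperature independent; the function name is illustrative. It also prints $-T\,\Delta S_{uni}$ (with the surroundings treated as a bath at $T$) to confirm the two agree.

```python
# Minimal sketch: Delta G = Delta H - T Delta S for melting one mole of ice.
# Assumption: the enthalpy and entropy of fusion are treated as temperature
# independent (heat-capacity corrections ignored).
DH_FUS = 6010.0            # J/mol, approximate enthalpy of fusion of ice
DS_FUS = DH_FUS / 273.15   # J/(mol K), makes Delta G vanish at 0 C

def gibbs_melting(t_celsius: float) -> float:
    """Delta G of melting (J/mol) at t_celsius, from system properties only."""
    T = t_celsius + 273.15
    return DH_FUS - T * DS_FUS

for t in (-10.0, 21.0):
    T = t + 273.15
    dG = gibbs_melting(t)
    dS_uni = DS_FUS - DH_FUS / T   # surroundings treated as a heat bath at T
    verdict = "melts" if dG < 0 else "does not melt"
    print(f"{t:6.1f} C: dG = {dG:+7.1f} J/mol, -T*dS_uni = {-T * dS_uni:+7.1f} -> ice {verdict}")
```

With these assumed values $\Delta G$ is roughly $+220$ J/mol at $-10\,{}^{\circ}\mathrm{C}$ and roughly $-462$ J/mol at $21\,{}^{\circ}\mathrm{C}$, even though $\Delta H > 0$ in both cases.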

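The value of the Gibbs free energy, which is often called the Gibbs potential, represents that portion of the store of internal energy that is available to do non-mechanical (non-$PV$) work; more precisely, at constant temperature and pressure the decrease $-\Delta G$ is the maximum non-$PV$ work the system can perform.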

At this point we can pause and take stock of what we did. We were able to achieve White’s vision (which, of course, he achieved himself) by eliminating any reference to the universe and its total entropy through the use of the Helmholtz or Gibbs free energies, and by using a mixed version of the first law in which heat and work live side by side with the state variables. This movement between different perspectives is what makes thermodynamics so thorny for the beginning student, and this discussion is particularly instructive as a result. The remaining questions about the different types of work (non-mechanical versus mechanical) will have to wait until a future post.