One-Sided Greens Functions and Causality

This week we pick up where we left off in the last post and continue probing the structure of the one-sided Greens function $$K(t,\tau)$$. While the computations of the previous post can be found in most introductory textbooks, I would be remiss if I didn’t mention that both the previous post and this one were heavily influenced by two books: Martin Braun’s ‘Differential Equations and Their Applications’ and Larry C. Andrews’ ‘Elementary Partial Differential Equations with Boundary Value Problems’.

As a recap, we found that a second-order inhomogeneous linear ordinary differential equation

\[ y''(t) + p(t) y'(t) + q(t) y(t) \equiv L[y] = f(t) \; , \]

($$y'(t) = \frac{d}{dt} y(t)$$) with initial conditions

\[ y(t_0) = y_0 \; \; \& \; \; y'(t_0) = y_0' \; \]

possesses the solution

\[ y(t) = A y_1(t) + B y_2(t) + y_p(t) \; ,\]

where $$y_i$$ are solutions to the homogeneous equation, $$\{A,B\}$$ are constants chosen to meet the initial conditions, and $$y_p$$ is the particular solution of the form

\[ y_p(t) = \int_{t_0}^{t} \, d\tau \, K(t,\tau) f(\tau) \; . \]

By historical convention, we call the kernel that propagates the influence of the inhomogeneous term in time (either forward or backward) a one-sided Greens function. In terms of the Wronskian $$W[y_1,y_2]$$, it has the explicit form

\[ K(t,\tau) = \frac{ \left| \begin{array}{cc} y_1(\tau) & y_2(\tau) \\ y_1(t) & y_2(t) \end{array} \right| } { W[y_1,y_2](\tau)} = \frac{ \left| \begin{array}{cc} y_1(\tau) & y_2(\tau) \\ y_1(t) & y_2(t) \end{array} \right| } { \left| \begin{array}{cc} y_1(\tau) & y_2(\tau) \\ y_1'(\tau) & y_2'(\tau) \end{array} \right| } \; . \]

Plugging $$t=t_0$$ into the particular solution gives

\[ y_p(t_0) = \int_{t_0}^{t_0} \, d\tau \, K(t_0,\tau) f(\tau) = 0 \]

as the initial datum for $$y_p$$ and

\[ y_p'(t_0) = K(t_0,t_0) f(t_0) + \int_{t_0}^{t_0} d\tau \, \left. \frac{\partial}{\partial t} K(t,\tau) \right|_{t=t_0} f(\tau) = 0 \]

for the initial datum for $$y_p'$$ (obtained by differentiating under the integral sign with the Leibniz rule), since the definite integral of any integrand with identical lower and upper limits vanishes and because

\[ K(t,t) = \frac{ \left| \begin{array}{cc} y_1(t) & y_2(t) \\ y_1(t) & y_2(t) \end{array} \right| } { W[y_1,y_2](t)} = 0 \; . \]

Because $$y_p$$ and $$y_p'$$ both vanish at $$t_0$$, the constants $$\{A, B\}$$ can be chosen to meet the initial conditions using the homogeneous solutions alone; in other words, the initial values are carried entirely by the homogeneous solutions.
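As a concrete check of these properties, the short SymPy sketch below builds $$K(t,\tau)$$ from the $$2 \times 2$$ determinant formula for the illustrative choice $$L[y] = y'' + b^2 y$$ (homogeneous solutions $$\cos bt$$ and $$\sin bt$$; the operator and the use of SymPy are assumptions made only for illustration). It confirms that $$K(t,t) = 0$$, which is what forces $$y_p(t_0) = y_p'(t_0) = 0$$, and also that $$\partial_t K(t,\tau)|_{t=\tau} = 1$$, which is what lets $$y_p$$ reproduce the forcing $$f(t)$$ once $$y_p''$$ is formed.

```python
import sympy as sp

t, tau = sp.symbols('t tau', real=True)
b = sp.symbols('b', positive=True)

# Illustrative operator L[y] = y'' + b**2 y with homogeneous solutions cos(bt), sin(bt)
y1, y2 = sp.cos(b * t), sp.sin(b * t)

# Wronskian W[y1, y2] evaluated at tau (simplifies to b)
W_tau = sp.simplify((y1 * sp.diff(y2, t) - sp.diff(y1, t) * y2).subs(t, tau))

# K(t, tau) = det([[y1(tau), y2(tau)], [y1(t), y2(t)]]) / W(tau)
numerator = sp.Matrix([[y1.subs(t, tau), y2.subs(t, tau)], [y1, y2]]).det()
K = sp.simplify(numerator / W_tau)

diag_value = sp.simplify(K.subs(t, tau))                    # K(tau, tau), expected 0
diag_slope = sp.simplify(sp.diff(K, t).subs(t, tau))        # dK/dt at t = tau, expected 1
homog_residual = sp.simplify(sp.diff(K, t, 2) + b**2 * K)   # L acting on K in t, expected 0

print(K)
print(diag_value, diag_slope, homog_residual)
```

The kernel should come out as $$\sin b(t-\tau)/b$$, the entry that appears for $$D^2 + b^2$$ in the table further on.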

The results for the one-sided Greens function can be extended in four ways that make the practice of handling systems much more convenient.

Arbitrary Finite Dimensions

An equation of arbitrary finite order $$n$$ (normalized, as above, so that the coefficient of $$y^{(n)}$$ is one) is handled straightforwardly by the relation

\[ K(t,\tau) = \frac{ \left| \begin{array}{cccc} y_1(\tau) & y_2(\tau) & \cdots & y_n(\tau) \\ y_1'(\tau) & y_2'(\tau) & \cdots & y_n'(\tau) \\ \vdots & \vdots & \ddots & \vdots \\ y_1^{(n-2)}(\tau) & y_2^{(n-2)}(\tau) & \cdots & y_n^{(n-2)} (\tau) \\ y_1(t) & y_2(t) & \cdots & y_n(t) \end{array} \right| } { W[y_1,y_2,\ldots,y_n](\tau)} \]

where the corresponding Wronskian is given by

\[ W[y_1,y_2,\ldots,y_n](\tau) = \left| \begin{array}{cccc} y_1(\tau) & y_2(\tau) & \cdots & y_n(\tau) \\ y_1'(\tau) & y_2'(\tau) & \cdots & y_n'(\tau) \\ \vdots & \vdots & \ddots & \vdots \\ y_1^{(n-1)}(\tau) & y_2^{(n-1)}(\tau) & \cdots & y_n^{(n-1)} (\tau) \end{array} \right| \]

and

\[ y^{(n)} \equiv \frac{d^n y}{d t^n} \; .\]
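Because the formula involves nothing more than two determinants, it is easy to mechanize. The SymPy sketch below is one way to do so, assuming (as in $$L[y]$$ above) that the equation is normalized to unit leading coefficient; the function name `one_sided_green` is hypothetical and not part of any library. Applied to the homogeneous solutions $$1, t, t^2$$ of $$y''' = 0$$, it should return $$(t-\tau)^2/2$$.

```python
import sympy as sp

def one_sided_green(sols, t, tau):
    """Hypothetical helper: K(t, tau) for a monic n-th order linear ODE,
    given n independent homogeneous solutions written in terms of t."""
    n = len(sols)

    def deriv_at_tau(s, k):
        # k-th derivative of s evaluated at tau (k = 0 gives s(tau) itself)
        return (sp.diff(s, t, k) if k > 0 else s).subs(t, tau)

    # Numerator: derivatives of order 0 .. n-2 at tau, last row is the solutions at t
    rows = [[deriv_at_tau(s, k) for s in sols] for k in range(n - 1)]
    numerator = sp.Matrix(rows + [list(sols)]).det()

    # Wronskian at tau: derivatives of order 0 .. n-1
    wronskian = sp.Matrix([[deriv_at_tau(s, k) for s in sols] for k in range(n)]).det()
    return sp.simplify(numerator / wronskian)

t, tau, b = sp.symbols('t tau b', real=True)
print(one_sided_green([sp.Integer(1), t, t**2], t, tau))   # kernel for y''' = f
print(one_sided_green([sp.exp(-b * t)], t, tau))            # kernel for y' + b y = f
```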

The generation of one-sided Greens functions is then a fairly mechanical process once the homogeneous solutions are known. Moreover, since solutions to initial value problems with continuous coefficients are guaranteed to exist and be unique, the corresponding one-sided Greens functions also exist and are unique. The following is a tabulated set of $$K(t,\tau)$$s adapted from Andrews' book.

| Operator | $$K(t,\tau)$$ |
|---|---|
| $$D + b$$ | $$e^{-b(t-\tau)}$$ |
| $$D^n, \; n = 2, 3, 4, \ldots$$ | $$\frac{(t-\tau)^{n-1}}{(n-1)!}$$ |
| $$D^2 + b^2$$ | $$\frac{1}{b} \sin b(t-\tau)$$ |
| $$D^2 - b^2$$ | $$\frac{1}{b} \sinh b(t-\tau)$$ |
| $$(D-a)(D-b), \; a \neq b$$ | $$\frac{1}{a-b} \left[ e^{a(t-\tau)} - e^{b(t-\tau)} \right]$$ |
| $$(D-a)^n, \; n = 2, 3, 4, \ldots$$ | $$\frac{(t-\tau)^{n-1}}{(n-1)!} e^{a(t-\tau)}$$ |
| $$D^2 - 2 a D + a^2 + b^2$$ | $$\frac{1}{b} e^{a(t-\tau)} \sin b (t-\tau)$$ |
| $$D^2 - 2 a D + a^2 - b^2$$ | $$\frac{1}{b} e^{a(t-\tau)} \sinh b (t-\tau)$$ |
| $$t^2 D^2 + t D - b^2$$ | $$\frac{\tau}{2 b}\left[ \left( \frac{t}{\tau} \right)^b - \left( \frac{\tau}{t} \right)^b \right]$$ |

Here $$D \equiv d/dt$$, and each kernel assumes the equation has been normalized so that its highest derivative has unit coefficient; for the Euler operator in the last row, this means dividing through by $$t^2$$, so that the forcing becomes $$f(t)/t^2$$.
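A quick way to audit entries like these is to check the three properties that characterize a second-order one-sided Greens function: $$K$$ solves the homogeneous equation in $$t$$, $$K(\tau,\tau) = 0$$, and $$\partial_t K(t,\tau)|_{t=\tau} = 1$$. The SymPy sketch below evaluates those conditions numerically at a couple of arbitrary sample points (the helper name `is_second_order_green` and the sample values are illustrative assumptions). It accepts the $$(D-a)(D-b)$$ entry with the relative minus sign and rejects the same entry with a plus sign. Because the helper assumes unit leading coefficient, the Euler entry is tested with the operator divided through by $$t^2$$.

```python
import sympy as sp

t, tau, a, b = sp.symbols('t tau a b', real=True)

def is_second_order_green(p, q, K, samples, tol=1e-9):
    """Hypothetical helper: test whether K(t, tau) is the one-sided Green's function
    of y'' + p(t) y' + q(t) y = f(t) by checking three conditions numerically."""
    conditions = [
        sp.diff(K, t, 2) + p * sp.diff(K, t) + q * K,  # K solves the homogeneous equation in t
        K.subs(t, tau),                                # K(tau, tau) = 0
        sp.diff(K, t).subs(t, tau) - 1,                # dK/dt = 1 at t = tau
    ]
    return all(abs(complex(c.subs(pt).evalf())) < tol for c in conditions for pt in samples)

samples = [{t: 1.7, tau: 0.6, a: 0.3, b: 1.2}, {t: 2.5, tau: 1.1, a: -0.4, b: 0.7}]
s = t - tau

# (D - a)(D - b) = D^2 - (a + b) D + a b
distinct_roots = (sp.exp(a * s) - sp.exp(b * s)) / (a - b)
print(is_second_order_green(-(a + b), a * b, distinct_roots, samples))   # True

# Same entry with a plus sign between the exponentials
wrong_sign = (sp.exp(a * s) + sp.exp(b * s)) / (a - b)
print(is_second_order_green(-(a + b), a * b, wrong_sign, samples))       # False

# D^2 - 2 a D + a^2 + b^2
damped = sp.exp(a * s) * sp.sin(b * s) / b
print(is_second_order_green(-2 * a, a**2 + b**2, damped, samples))       # True

# Euler operator t^2 D^2 + t D - b^2, divided through by t^2
euler = tau / (2 * b) * ((t / tau)**b - (tau / t)**b)
print(is_second_order_green(1 / t, -b**2 / t**2, euler, samples))        # True
```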

Imposing Causality

The second extension is a little more subtle. Allow the inhomogeneous term $$f(t)$$ to be a delta function acting at some time $$a > t_0$$, so that the differential equation becomes

\[ L[y] = \delta(t-a), \; \; y(t_0) = 0, \; \; y'(t_0) = 0 \; .\]

The particular solution

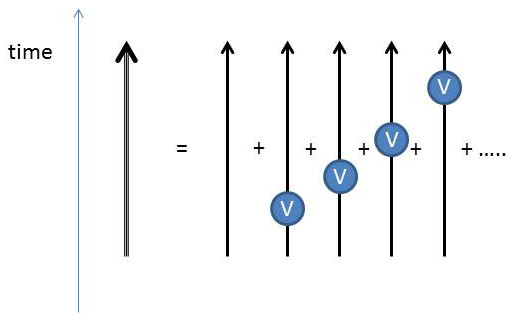

\[ y = \int_{t_0}^t \, d \tau \, K(t,\tau) \delta(\tau – a) = \left\{ \begin{array}{lc} 0, & t_0 \leq t < a \\ K(t,a), & t \geq a \end{array} \right. \] now represents how the system responds to the unit impulse delivered at time $$t=a$$ by the delta-function. The discontinuous response results from the fact that the system at $$t=a$$ receives a sharp blow that changes its evolution from the unforced evolution it was following before the impulse to a new unforced evolution with new initial conditions at $$t=a$$ that reflect the influence of the impulse. By applying a little manipulation to the right-hand side, and allowing $$t_0$$ to recede to infinity, the above result transforms into \[ K^+(t,\tau) = \left\{ \begin{array}{lc} 0, & t_0 \leq t < \tau \\ K(t,\tau), & \tau \leq t < \infty \end{array} \right. = \theta(t-\tau) K(t,\tau) \; ,\] which is a familiar result from Quantum Evolution – Part 3. In this derivation, we get an alternative and more mathematically rigorous was of understanding why Heaviside theta function (or step function, if you prefer) enforces causality. The undecorated one-sided Greens function $$K(t,\tau)$$ is a mathematical object capable of evolving the system forward or backward in time with equal facility. The one-sided retarded Greens function $$K^+(t,\tau)$$ is physically meaningful because it will not evolve the influence of an applied force to a time earlier than the force was applied.
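The causal structure can also be seen numerically. The sketch below uses SciPy's `solve_ivp`; the oscillator $$y'' + b^2 y$$, the parameter values, and the narrow Gaussian standing in for $$\delta(t-a)$$ are all illustrative assumptions. It integrates the equation from rest and compares the result with the retarded kernel $$K^+(t,a) = \theta(t-a)\sin b(t-a)/b$$. Before the pulse the response stays at zero; afterwards it tracks the retarded kernel up to a small error set by the pulse width.

```python
import numpy as np
from scipy.integrate import solve_ivp

b, a, eps = 2.0, 1.0, 0.01   # frequency, impulse time, pulse width (illustrative values)

def pulse(t):
    # Unit-area Gaussian of width eps approximating delta(t - a)
    return np.exp(-0.5 * ((t - a) / eps) ** 2) / (eps * np.sqrt(2.0 * np.pi))

def rhs(t, state):
    # State-space form of y'' + b^2 y = pulse(t)
    y, yp = state
    return [yp, -b**2 * y + pulse(t)]

def K_plus(t, tau):
    # Retarded one-sided Green's function for D^2 + b^2
    return np.heaviside(t - tau, 0.0) * np.sin(b * (t - tau)) / b

t_eval = np.linspace(0.0, 4.0, 401)
sol = solve_ivp(rhs, (0.0, 4.0), [0.0, 0.0], t_eval=t_eval,
                max_step=eps / 5, rtol=1e-8, atol=1e-10)

before = t_eval < a - 10 * eps
print(np.max(np.abs(sol.y[0][before])))               # essentially zero: no response before the kick
print(np.max(np.abs(sol.y[0] - K_plus(t_eval, a))))   # small, of order eps
```

Shrinking `eps` (together with `max_step`) should drive the second number toward zero.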

Recasting in State Space Notation

An alternative and frequently more insightful approach to solving ordinary differential equations comes from recasting the structure into state space language, in which the differential equation(s) reduce to a set of coupled first-order equations of the form

\[ \frac{d}{dt} \bar S = \bar f(\bar S; t) \]

Quantum Evolution – Part 2 presents this approach applied to the simple harmonic oscillator. The propagator (also called the state transition matrix or principal fundamental matrix) of the system contains the one-sided Greens function as the upper-right element of its structure. This is easiest to see by working with a second-order system with linearly independent solutions $$y_1$$ and $$y_2$$ and initial conditions $$y(t_0) = y_0$$ and $$y'(t_0) = y_0'$$. In analogy with the previous post, the initial conditions can be solved at time $$t_0$$ for the constants $$C_1$$ and $$C_2$$, yielding the expression

\[ \left[ \begin{array}{c} C_1 \\ C_2 \end{array} \right] = \frac{1}{W(t_0)} \left[ \begin{array}{cc} y_2' & -y_2 \\ -y_1' & y_1 \end{array} \right]_{t_0} \left[ \begin{array}{c} y_0 \\ y_0' \end{array} \right] \equiv M_{t_0} \left[ \begin{array}{c} y_0 \\ y_0' \end{array} \right] \; , \]

where the subscript notation $$[\,]_{t_0}$$ means that all of the expressions in the matrix are evaluated at time $$t_0$$. Now an arbitrary solution $$y(t)$$ of the homogeneous equation is a linear combination of the independent solutions weighted by the constants just determined:

\[ \left[ \begin{array}{c} y(t) \\ y'(t) \end{array} \right] = \left[ \begin{array}{cc} y_1 & y_2 \\ y_1' & y_2' \end{array} \right]_{t} \left[ \begin{array}{c} C_1 \\ C_2 \end{array} \right] \equiv \Omega_{t} \left[ \begin{array}{c} C_1 \\ C_2 \end{array} \right] = \Omega_{t} M_{t_0} \left[ \begin{array}{c} y_0 \\ y_0' \end{array} \right] \; .\]

The propagator, which is formally defined as

\[ U(t,t_0) = \frac{\partial \bar S(t)}{\partial \bar S(t_0) } \; ,\]

is easily read off to be

\[ U(t,t_0) = \Omega_t M_{t_0} \; , \]

which, when back-substituting the forms of $$\Omega_t$$ and $$M_{t_0}$$, gives

\[ U(t,t_0) = \frac{1}{W(t_0)} \left[ \begin{array}{cc} y_1 & y_2 \\ y_1' & y_2' \end{array} \right]_{t} \left[ \begin{array}{cc} y_2' & -y_2 \\ -y_1' & y_1 \end{array} \right]_{t_0} \; .\]

In state space notation, the inhomogeneous term takes the form $$\left[ \begin{array}{c} 0 \\ f(t) \end{array} \right]$$, and the particular solution is $$\bar S_p(t) = \int_{t_0}^{t} d\tau \, U(t,\tau) \left[ \begin{array}{c} 0 \\ f(\tau) \end{array} \right]$$. Only the second column of the propagator survives this multiplication, so the component relevant to $$y_p$$ is the upper-right element, which is

\[ \left\{ U(t,t_0) \right\}_{1,2} = \frac{y_1(t_0) y_2(t) - y_1(t) y_2(t_0)}{W(t_0)} \; , \]

which, with $$t_0$$ playing the role of $$\tau$$, we recognize as the one-sided Greens function $$K(t,t_0)$$. Multiplying the whole propagator by the Heaviside function $$\theta(t-t_0)$$ enforces causality and gives the retarded one-sided Greens function in the $$(1,2)$$ component.
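For constant coefficients the propagator is also a matrix exponential, which gives an independent check on the construction. The NumPy/SciPy sketch below (the oscillator $$y'' + b^2 y = f$$, its state matrix `A`, and the parameter values are illustrative assumptions) builds $$U(t,t_0) = \Omega_t M_{t_0}$$ with $$M_{t_0} = \Omega_{t_0}^{-1}$$, compares it with `scipy.linalg.expm` applied to the state matrix, reads off the $$(1,2)$$ element, and applies the Heaviside factor.

```python
import numpy as np
from scipy.linalg import expm

b = 1.5   # oscillator frequency in y'' + b^2 y = f (illustrative value)

def omega(t):
    # Fundamental matrix Omega_t built from y1 = cos(bt), y2 = sin(bt)
    return np.array([[np.cos(b * t), np.sin(b * t)],
                     [-b * np.sin(b * t), b * np.cos(b * t)]])

def propagator(t, t0):
    # U(t, t0) = Omega_t M_{t0}, where M_{t0} is the inverse of Omega_{t0}
    return omega(t) @ np.linalg.inv(omega(t0))

def retarded_propagator(t, t0):
    # theta(t - t0) U(t, t0): vanishes whenever t precedes t0
    return np.heaviside(t - t0, 0.0) * propagator(t, t0)

t, t0 = 2.3, 0.4
U = propagator(t, t0)
A = np.array([[0.0, 1.0], [-b**2, 0.0]])   # state matrix of d/dt [y, y'] = A [y, y']

print(np.allclose(U, expm(A * (t - t0))))             # True
print(np.isclose(U[0, 1], np.sin(b * (t - t0)) / b))  # True: the (1,2) entry is K(t, t0)
```

The explicit inverse is fine for a $$2 \times 2$$ illustration; for larger systems one would typically solve a linear system instead.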

Using the Fourier Transform

While all of the machinery discussed above is straightforward to apply, it does involve a lot of steps (e.g., finding the independent solutions, forming the Wronskian, forming the one-sided Greens function, applying causality, etc.). There is often a faster way to perform all of these steps using the Fourier transform. This will be illustrated for a simple one-dimensional problem (adapted from ‘Mathematical Tools for Physics’ by James Nearing) of a mass moving in a viscous fluid subjected to a time-varying force

\[ \frac{dv}{dt} + \beta v = f(t) \; ,\]

where $$\beta$$ is a constant characterizing the fluid and $$f(t)$$ is the force per unit mass.

We assume that the velocity has a Fourier transform

\[ v(t) = \int_{-\infty}^{\infty} d \omega \, V(\omega) e^{-i\omega t} \; \]

with the corresponding transform pair

\[ V(\omega) = \frac{1}{2 \pi} \int_{-\infty}^{\infty} dt \, v(t) e^{+i\omega t} \; .\]

Likewise, the force possesses a Fourier transform

\[ f(t) = \int_{-\infty}^{\infty} d \omega \, F(\omega) e^{-i\omega t} \; .\]

Plugging the transforms into the differential equation yields the algebraic equation

\[ -i \omega V(\omega) + \beta V(\omega) = F(\omega) \; ,\]

which is easily solved for $$V(\omega)$$ and which, when substituted back in, gives the expression for the particular solution

\[ v_p(t) = i \int_{-\infty}^{\infty} d \omega \frac{F(\omega)}{\omega + i \beta} e^{-i\omega t} \; .\]
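The sign convention matters here: with $$e^{-i\omega t}$$ in the inverse transform, $$d/dt$$ becomes multiplication by $$-i\omega$$. A minimal SymPy check of the algebra (symbol names are illustrative) substitutes a single Fourier mode into the equation and solves for its amplitude:

```python
import sympy as sp

t, w, beta = sp.symbols('t omega beta', real=True)
V, F = sp.symbols('V F')   # amplitudes of a single Fourier mode (treated as plain symbols)

mode = V * sp.exp(-sp.I * w * t)       # one component of v(t)
lhs = sp.diff(mode, t) + beta * mode   # dv/dt + beta v for that component
V_sol = sp.solve(sp.Eq(lhs, F * sp.exp(-sp.I * w * t)), V)[0]

print(sp.simplify(V_sol - sp.I * F / (w + sp.I * beta)))   # 0: matches i F / (omega + i beta)
```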

Eliminating $$F(\omega)$$ by using its transform pair, we find that

\[ v_p(t) = \frac{i}{2 \pi} \int_{-\infty}^{\infty} d\tau K(t,\tau) f(\tau) \]

with the kernel

\[ K(t,\tau) = \int_{-\infty}^{\infty} d \omega \frac{e^{-i \omega (t-\tau)}}{\omega + i \beta} \; .\]

This has exactly the form of a one-sided Greens function. Even more pleasing is the fact that when complex contour integration is used to evaluate the integral, we discover that causality is already built in and that what we have obtained is actually a retarded one-sided Greens function

\[ K^+(t,\tau) = \left\{ \begin{array}{lc} 0 & t < \tau \\ -2 \pi i e^{-\beta(t-\tau)} & t > \tau \end{array} \right. \]

Causality results because, for $$\beta > 0$$, the pole of the integrand at $$\omega = -i\beta$$ lies in the lower half of the complex plane. By Jordan's lemma, the semicircular contour must be closed in the upper half-plane when $$t < \tau$$, in which case it encloses no poles and the integral vanishes. When $$t > \tau$$, the semicircle must be closed in the lower half-plane, where it encloses the pole at $$\omega = -i\beta$$; the contour is then traversed clockwise, so the integral equals $$-2\pi i$$ times the residue $$e^{-\beta(t-\tau)}$$.
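The residue computation for $$t > \tau$$ can be reproduced symbolically. In the SymPy sketch below, $$s = t - \tau$$ and $$\beta$$ are declared positive (assumptions matching the case where the contour closes in the lower half-plane), and the residue at the simple pole is obtained by multiplying by $$\omega + i\beta$$, which cancels the denominator, before substituting $$\omega = -i\beta$$.

```python
import sympy as sp

omega = sp.symbols('omega')
beta, s = sp.symbols('beta s', positive=True)   # s = t - tau > 0

integrand = sp.exp(-sp.I * omega * s) / (omega + sp.I * beta)
pole = -sp.I * beta                              # lower half-plane since beta > 0

# Residue at a simple pole: the factor (omega - pole) cancels the denominator, then evaluate
residue = sp.simplify(((omega - pole) * integrand).subs(omega, pole))

# A clockwise (lower half-plane) contour contributes -2*pi*i times the residue
K_plus_late = -2 * sp.pi * sp.I * residue
print(residue, K_plus_late)
```

Multiplying `K_plus_late` by the prefactor $$i/2\pi$$ leaves $$e^{-\beta(t-\tau)}$$, the kernel in the final form of the particular solution.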

The final form of the particular solution is

\[ v_p(t) = \int_{-\infty}^t d \tau e^{-\beta (t-\tau)} f(\tau) \]

which is the same result we would have obtained from the retarded one-sided Greens function $$\theta(t-\tau) e^{-\beta(t-\tau)}$$ built from the entry for the operator $$D + \beta$$ in the table above.
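As a final numerical sanity check, the sketch below uses SciPy's `quad` and `solve_ivp`; the drag constant and the forcing $$f(t) = \sin t$$ switched on at $$t = 0$$ are illustrative assumptions. It evaluates the retarded-kernel integral directly and compares it with a direct ODE solve and with the closed form $$v(t) = (\beta \sin t - \cos t + e^{-\beta t})/(1+\beta^2)$$ of $$v' + \beta v = \sin t$$, $$v(0) = 0$$. Because the force vanishes before $$t=0$$, the lower limit $$-\infty$$ can be replaced by $$0$$.

```python
import numpy as np
from scipy.integrate import quad, solve_ivp

beta = 0.8   # drag constant (illustrative value)

def force(t):
    # Force per unit mass: sin(t), switched on at t = 0 (zero before)
    return np.sin(t) if t >= 0.0 else 0.0

def v_green(t):
    # v_p(t) = int_{-inf}^{t} exp(-beta (t - tau)) f(tau) dtau, with f = 0 for tau < 0
    value, _ = quad(lambda tau: np.exp(-beta * (t - tau)) * force(tau), 0.0, t)
    return value

def v_closed_form(t):
    # Solution of v' + beta v = sin t with v(0) = 0
    return (beta * np.sin(t) - np.cos(t) + np.exp(-beta * t)) / (1.0 + beta**2)

ts = np.linspace(0.0, 10.0, 21)
green = np.array([v_green(t) for t in ts])
ode = solve_ivp(lambda t, v: [-beta * v[0] + force(t)], (0.0, 10.0), [0.0],
                t_eval=ts, rtol=1e-10, atol=1e-12)

print(np.max(np.abs(green - v_closed_form(ts))))     # tiny (quadrature error only)
print(np.max(np.abs(ode.y[0] - v_closed_form(ts))))  # small (ODE solver tolerance)
```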