The Wonderful Wronskian

Well, the long haul through quantum evolution is over for now, but there are a few dangling pieces of mathematical machinery that are worth examining. These pieces apply to all linear time-evolution (i.e. initial-value) problems. This week I will be exploring the very useful Wronskian.

To start the exploration, we’ll consider the general form of a linear second-order ordinary differential equation with non-constant coefficients, written in the usual standard form with the coefficient of the highest derivative normalized to one. The operator $$L$$, defined as

\[ L[y](t) \equiv \frac{d^2 y}{dt^2} + p(t) \frac{dy}{dt} + q(t)y \; , \]

provides a convenient way to express the various equations, homogeneous and inhomogeneous, that arise, without getting bogged down in notational minutiae.

Let $$y_1(t)$$ and $$y_2(t)$$ be two solutions of the homogeneous equation $$L[y] = 0$$. Named after Jozef Wronski, the Wronskian,

\[ W[y_1,y_2](t) = y_1(t) y'_2(t) - y'_1(t) y_2(t) \; ,\]

is a function of the two solutions and their derivatives. In most contexts, we can eliminate the argument $$[y_1,y_2]$$ and simply express the Wronskian as $$W(t)$$. This simplification keeps the notation uncluttered and helps to isolate the important features.

The first amazing property of the Wronskian is that it provides a sure-fire test that the two solutions are independent. Finding independent solutions of the homogeneous equation amounts to solving the problem completely, since an arbitrary solution can always be decomposed as a linear combination of the independent solutions multiplied by the appropriate constants so that the solution satisfies the initial conditions.

The Wronskian indicates that the solutions are independent if $$W[y_1,y_2](t) \neq 0$$ for all times $$t$$. The proof follows fairly easily from an application of linear algebra to find the constants that satisfy the initial conditions. If $$y_1(t)$$ and $$y_2(t)$$ are independent solutions then $$y(t) = c_1 y_1(t) + c_2 y_2(t)$$ is the most general solution that can be constructed with the initial conditions $$y(t_0)= y_0$$ and $$y'(t_0) = y'_0$$, where the prime denotes differentiation with respect to $$t$$. Plugging $$t_0$$ into the general form yields the system of equations

\[ \left[ \begin{array}{c} c_1 y_1(t_0) + c_2 y_2(t_0) \\ c_1 y'_1(t_0) + c_2 y'_2(t_0) \end{array} \right] = \left[ \begin{array}{c} y_0 \\ y'_0 \end{array} \right] \]

which can be written in the more suggestive form

\[ \left[ \begin{array}{cc} y_1(t_0) & y_2(t_0) \\ y'_1(t_0) & y'_2(t_0) \end{array} \right] \left[ \begin{array}{c} c_1 \\ c_2 \end{array} \right] = \left[ \begin{array}{c} y_0 \\ y'_0 \end{array} \right] \; . \]

This equation has a unique solution for arbitrary initial conditions only when the determinant of the matrix on the left-hand side is not equal to zero. Since the determinant of this matrix is the Wronskian evaluated at $$t_0$$, this completes the proof.
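The linear system above can be sketched numerically. The following is a minimal Python illustration, assuming the example equation $$y'' + y = 0$$ with $$y_1 = \cos t$$ and $$y_2 = \sin t$$; the function name `solve_constants` and the initial data are hypothetical choices made only for this sketch. It solves for $$c_1$$ and $$c_2$$ by Cramer’s rule, with the Wronskian appearing as the determinant in the denominator.

```python
import math

def solve_constants(y1, dy1, y2, dy2, t0, y0, dy0):
    """Solve [[y1, y2], [y1', y2']] (c1, c2)^T = (y0, y0')^T at t0 by Cramer's rule."""
    a, b = y1(t0), y2(t0)
    c, d = dy1(t0), dy2(t0)
    w = a * d - c * b  # the Wronskian W[y1, y2](t0)
    if w == 0.0:
        raise ValueError("Wronskian vanishes at t0: no unique c1, c2")
    c1 = (y0 * d - b * dy0) / w
    c2 = (a * dy0 - c * y0) / w
    return c1, c2, w

def neg_sin(t):
    return -math.sin(t)

# Assumed example: y'' + y = 0 with y1 = cos t (y1' = -sin t), y2 = sin t (y2' = cos t).
t0, y0, dy0 = 0.5, 2.0, -1.0
c1, c2, w = solve_constants(math.cos, neg_sin, math.sin, math.cos, t0, y0, dy0)

def y(t):
    """General solution with the constants fixed by the initial conditions."""
    return c1 * math.cos(t) + c2 * math.sin(t)

def dy(t):
    """First derivative of the solution."""
    return -c1 * math.sin(t) + c2 * math.cos(t)

print(w, y(t0), dy(t0))
```

For this pair the Wronskian is $$\cos^2 t + \sin^2 t = 1$$ at every time, so the constants can be found for any choice of $$t_0$$.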

The reader might reasonably worry that, since the Wronskian depends on time, it must be evaluated at every time to ensure that it doesn’t vanish, and that performing this check severely limits its usefulness. Thankfully, this is not a concern, since knowing the Wronskian at one time determines it at all times. The Wronskian’s equation of motion gives its time evolution, which is just the thing needed to see how its value changes in time. Solving this equation of motion starts with the observation

\[ \frac{d}{dt} W(t) = y'_1(t) y'_2(t) + y_1(t) y^{\prime \prime}_2(t) - y^{\prime \prime}_1(t) y_2(t) - y'_1(t) y'_2(t) \\ = y_1(t) y^{\prime \prime}_2(t) - y^{\prime \prime}_1(t) y_2(t) \; . \]

Now since each of the $$y_i$$ satisfies $$L[y_i](t) = 0$$, their second derivatives can be eliminated using $$y^{\prime \prime}_i = - p(t) y'_i - q(t) y_i$$. Substituting these relations in yields

\[ \frac{d}{dt} W(t) = y_1(t) \left( -p(t) y'_2 - q(t) y_2 \right) - \left( -p(t) y'_1 - q(t) y_1 \right) y_2 \\ = -p(t) \left( y_1(t) y'_2(t) - y'_1(t) y_2(t) \right) \; . \]

Recognizing the presence of the Wronskian on the right-hand side, we find the particularly elegant equation for its evolution

\[ \frac{d}{dt} W(t) = -p(t) W(t) \]

that has solutions

\[ W(t) = W_0 \exp\left[ -\int_{t_0}^t p(t') dt' \right] \]

where $$W_0 \equiv W[y_1,y_2](t_0)$$. The mathematical community typically calls this result Abel’s Formula. Because the exponential never vanishes, a Wronskian that is non-zero at $$t_0$$ must be non-zero over the entire time span on which the operator $$L$$ is well-defined (i.e. where both $$p(t)$$ and $$q(t)$$ are well-defined).
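Abel’s Formula is easy to check numerically. The sketch below assumes the example equation $$y'' - (2/t) y' + (2/t^2) y = 0$$ on $$t > 0$$, which is solved by $$y_1 = t$$ and $$y_2 = t^2$$; the equation, the helper names (`simpson`, `wronskian`, `abel`), and the chosen times are all illustrative assumptions. It compares the Wronskian computed directly from the solutions with the value propagated from $$t_0$$ by the exponential of the integral of $$p(t)$$.

```python
import math

def simpson(f, a, b, n=200):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3.0

# Assumed example: y'' - (2/t) y' + (2/t^2) y = 0, so p(t) = -2/t.
def p(t):
    return -2.0 / t

def wronskian(t):
    """W = y1 * y2' - y1' * y2 for y1 = t and y2 = t^2."""
    return t * (2.0 * t) - 1.0 * t**2

def abel(t, t0):
    """Propagate W from t0 to t using W(t) = W0 * exp(-integral of p)."""
    return wronskian(t0) * math.exp(-simpson(p, t0, t))

t0, t = 1.0, 3.0
direct = wronskian(t)
via_abel = abel(t, t0)
print(direct, via_abel)
```

Here the direct value is $$W(t) = t^2$$, and the two numbers should agree to within the quadrature error.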

The Wronskian possesses another remarkable property. Given that we’ve found a solution to the equation $$L[y] = 0$$, the Wronskian can construct another, independent solution for us. It is rarely needed as there are easier ways to find these solutions (e.g. roots of the characteristic equation, Frobenius’s series solution, lookup tables, and the like) but it is a straightforward method that is guaranteed to work.

The construction starts with the observation that the Wronskian depends solely on the function $$p(t)$$ (up to the constant $$W_0$$) and not on the details of the solutions to $$L[y] = 0$$. So once one solution is known, we can derive the differential equation satisfied by the second solution by using the known form of the Wronskian. Dividing the definition of the Wronskian by $$y_1$$, we find the equation to be

\[ y'_2 - \frac{y'_1}{y_1} y_2 = y'_2 - \left( \frac{d}{dt} \ln(y_1) \right) y_2 = \frac{W}{y_1} \; .\]

This is just a first-order inhomogeneous differential equation that can be solved using the integrating factor

\[ \mu(t) = \frac{1}{y_1} \; . \]

Recall that an integrating factor $$\mu(t)$$ multiplies a generic first-order equation of the form

\[ \frac{dy}{dt} + a(t) y = b(t) \]

and transforms the left-hand side of the differential equation into a total time derivative

\[ \frac{d}{dt} \left( \mu(t) y \right)= \mu(t) b(t) \]

provided that

\[ \frac{d \mu(t)}{d t} = a(t) \mu(t) \]

or, once integrated,

\[ \mu(t) = \exp \left( \int a(t) dt \right) \; .\]

The solution to the original first-order equation is then

\[ y = \frac{1}{\mu(t)} \int \mu(t) b(t) \, dt \; . \]

Applying this to the equation for $$y_2$$, with $$a(t) = -y'_1/y_1$$ and $$b(t) = W/y_1$$, gives

\[ y_2(t) = y_1(t) \left( \int \frac{W(t)}{y_1(t)^2} dt \right) \;. \]
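As a quick check of this formula (an illustrative example chosen here, not part of the derivation above), consider $$y'' - 2y' + y = 0$$, which has $$p(t) = -2$$ and the known solution $$y_1(t) = e^{t}$$. Abel’s Formula with $$t_0 = 0$$ gives $$W(t) = W_0 e^{2t}$$, so that

\[ y_2(t) = e^{t} \int \frac{W_0 e^{2t}}{e^{2t}} dt = W_0 \, t \, e^{t} \; , \]

up to an additive multiple of $$y_1$$ coming from the constant of integration. This is the familiar second solution for a repeated root of the characteristic equation.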

The usefulness of the Wronskian doesn’t stop there. It also provides a solution to the inhomogeneous equation

\[ \frac{d^2y}{dt^2} + p(t) \frac{dy}{dt} + q(t) y = g(t) \; ,\]

through the variation of parameters approach. In analogy with the homogeneous case, define the function

\[ \phi(t) = u_1(t) y_1(t) + u_2(t) y_2(t) \; , \]

where the $$u_i(t)$$ play the role of time-varying versions of the constants $$c_i$$, subject to $$\phi(t_0) = 0$$ and $$\phi'(t_0) = 0$$, which ensures that the homogeneous solution carries the initial conditions.

Now compute the first derivative of $$\phi(t)$$ to get

\[ \frac{d \phi}{dt} = [u'_1 y_1 + u'_2 y_2] + [u_1 y'_1 + u_2 y'_2] \; . \]

We can limit the time derivatives of the $$u_i$$ to first order if we impose the condition, called the condition of osculation, that

\[ u'_1 y_1 + u'_2 y_2 = 0 \]

since $$\phi^{\prime \prime}$$ can then contain at most first derivatives $$u'_i$$ of the unknown functions. The condition of osculation simplifies the second derivative to

\[ \frac{d^2 \phi}{dt^2} = u'_1 y'_1 + u_1 y^{\prime \prime}_1 + u'_2 y'_2 + u_2 y^{\prime \prime}_2 \; .\]

Again the second derivatives of the $$y_i$$ can be eliminated by isolating them in $$L[y_i] = 0 $$ and then substituting the results back into the equation for $$\phi^{\prime \prime}$$. Doing so, we arrive at

\[ \frac{d^2 \phi}{dt^2} = u'_1 y'_1 + u_1 \left( -p(t) y'_1 - q(t) y_1 \right) + u'_2 y'_2 + u_2 \left( -p(t) y'_2 - q(t) y_2 \right) \]

which simplifies to

\[ \frac{d^2 \phi}{dt^2} = u'_1 y'_1 + u'_2 y'_2 - p(t) \phi'(t) - q(t) \phi(t) \]

(still subject to the condition of osculation). Now we can evaluate $$L[\phi]$$ to find

\[ L[\phi] = u'_1 y'_1 + u'_2 y'_2 \; .\]

Since the inhomogeneous equation requires $$L[\phi] = g(t)$$, this relation and the condition of osculation must be solved together to yield the unknown $$u_i$$. Recasting these relations into a single matrix equation

\[ \left[ \begin{array}{cc} y_1 & y_2 \\ y'_1 & y'_2 \end{array} \right] \left[ \begin{array}{c} u'_1 \\ u'_2 \end{array} \right] = \left[ \begin{array}{c} 0 \\ g(t) \end{array} \right] \]

allows for a transparent solution via linear algebra. The solution presents itself immediately as

\[ \left[ \begin{array}{c} u'_1 \\ u'_2 \end{array} \right] = \frac{1}{W(t)} \left[ \begin{array}{cc} y'_2 & -y_2 \\ -y'_1 & y_1 \end{array} \right] \left[ \begin{array}{c} 0 \\ g(t) \end{array} \right] = \frac{1}{W(t)}\left[ \begin{array}{c} -y_2(t) g(t) \\ y_1(t) g(t) \end{array} \right] \; . \]

Since these equations are first order, a simple integration yields

\[ \left[ \begin{array}{c} u_1(t) \\ u_2(t) \end{array} \right] = \int_{t_0}^t d\tau \, \frac{1}{W(\tau)} \left[ \begin{array}{c} -y_2(\tau) \\ y_1(\tau) \end{array} \right] g(\tau) \; .\]

The full solution is written as

\[ \phi(t) = \int_{t_0}^t d\tau \, \frac{1}{W(\tau)} \left( -y_1(t) y_2(\tau) + y_2(t) y_1(\tau) \right) g(\tau) \; ,\]

which condenses nicely into

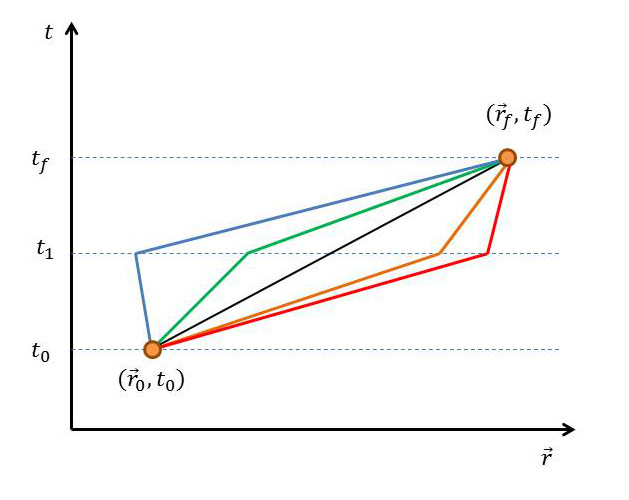

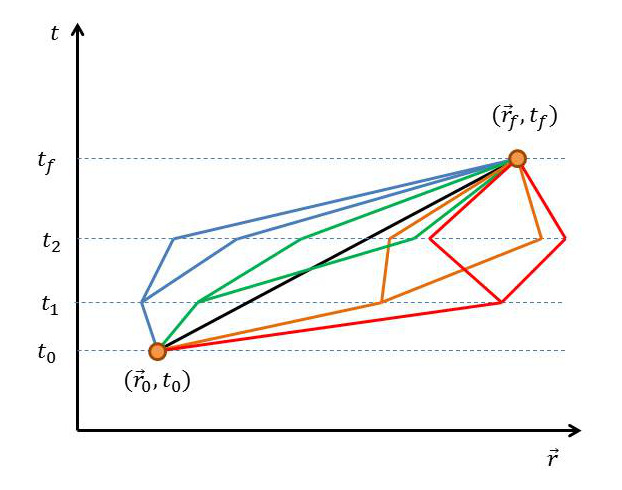

\[ \phi(t) = \int_{t_0}^t d\tau \, K(t,\tau) g(\tau) \; , \]

with

\[ K(t,\tau) = \frac{ -y_1(t) y_2(\tau) + y_1(\tau) y_2(t) }{W(\tau)} = \frac{ \left| \begin{array}{cc} y_1(\tau) & y_2(\tau) \\ y_1(t) & y_2(t) \end{array} \right|}{W(\tau)} \; .\]
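To see the kernel in action, here is a minimal Python sketch under assumed inputs: the equation $$y'' + y = g(t)$$ with $$y_1 = \cos t$$, $$y_2 = \sin t$$, a constant forcing $$g = 1$$, and $$t_0 = 0$$. The helper names (`simpson`, `kernel`, `phi`) are hypothetical. For this pair the kernel reduces to $$\sin(t - \tau)$$, and the integral can be compared against the closed form $$1 - \cos t$$.

```python
import math

def simpson(f, a, b, n=400):
    """Composite Simpson's rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3.0

def kernel(t, tau):
    """K(t, tau) built from y1 = cos and y2 = sin (assumed example)."""
    y1_t, y2_t = math.cos(t), math.sin(t)
    y1_s, y2_s = math.cos(tau), math.sin(tau)
    dy1_s, dy2_s = -math.sin(tau), math.cos(tau)
    w = y1_s * dy2_s - dy1_s * y2_s  # Wronskian at tau (equals 1 here)
    return (-y1_t * y2_s + y1_s * y2_t) / w

def phi(t, g, t0=0.0):
    """Particular solution phi(t) = integral from t0 to t of K(t, tau) g(tau)."""
    return simpson(lambda tau: kernel(t, tau) * g(tau), t0, t)

def g(tau):
    return 1.0  # assumed constant forcing

value = phi(2.0, g)
exact = 1.0 - math.cos(2.0)
print(value, exact)
```

The closed form satisfies $$\phi(0) = 0$$, $$\phi'(0) = 0$$, and $$\phi'' + \phi = 1$$, which is exactly what the construction requires.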

The Wronskian is not limited to second-order equations and extensions to higher orders are relatively easy. For example, the equation

\[ y^{\prime \prime \prime} + a_2(t) y^{\prime \prime} + a_1(t) y' + a_0(t) y = f(t) \]

has a Wronskian defined by

\[ W(t) = \left| \begin{array}{ccc} y_1 & y_2 & y_3 \\ y'_1 & y'_2 & y'_3 \\ y_1^{\prime \prime} & y_2^{\prime \prime} & y_3^{\prime \prime} \end{array} \right| \]

with the corresponding kernel for solving the inhomogeneous equation

\[ K(t,\tau) = \frac{\left| \begin{array}{ccc} y_1(\tau) & y_2(\tau) & y_3(\tau) \\ y'_1(\tau) & y'_2(\tau) & y'_3(\tau) \\ y_1(t) & y_2(t) & y_3(t) \end{array} \right|}{W(\tau)} \]

and with a corresponding equation of motion

\[ \frac{d}{dt} W(t) = -a_2(t) W(t) \; .\]

The steps to confirm these results follow in analogy with what was presented above. In other words, solving the homogeneous equation for the initial conditions gives the form of the Wronskian as a determinant and the variation of parameters method gives the kernel. The verification of Abel’s formula follows from the recognition that, when computing the derivative of a determinant, one first applies the product rule to produce 3 separate terms (one for each row) and that only the one with the derivative acting on the last row survives, since each of the other two contains a repeated row. Substitution using the original equation then leads to the Wronskian’s evolution depending only on the coefficient multiplying the second-highest derivative (i.e. the derivative of order $$n-1$$ for an equation of order $$n$$). Generalizations to even higher-order equations are done the same way.
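A simple check of the third-order formulas (an illustrative example chosen here) is the equation $$y^{\prime \prime \prime} = f(t)$$, for which $$a_2 = a_1 = a_0 = 0$$ and the homogeneous solutions can be taken as $$y_1 = 1$$, $$y_2 = t$$, and $$y_3 = t^2$$. The Wronskian is

\[ W(t) = \left| \begin{array}{ccc} 1 & t & t^2 \\ 0 & 1 & 2t \\ 0 & 0 & 2 \end{array} \right| = 2 \; , \]

which is constant, as the equation of motion requires when $$a_2 = 0$$. The kernel is

\[ K(t,\tau) = \frac{1}{2} \left| \begin{array}{ccc} 1 & \tau & \tau^2 \\ 0 & 1 & 2\tau \\ 1 & t & t^2 \end{array} \right| = \frac{t^2 - 2 t \tau + \tau^2}{2} = \frac{(t-\tau)^2}{2} \; , \]

so that $$\phi(t) = \int_{t_0}^t d\tau \, \frac{(t-\tau)^2}{2} f(\tau)$$, which is the standard single-integral form of a threefold repeated integration of $$f$$.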

The expression $$K(t,\tau)$$ is called a one-sided Green’s function and a study of it will be the subject of next week’s entry.