Vectors and Forms: Part 1 – The Basics

I thought it might be nice to have a change of pace in this column and discuss some differential geometry and the relationship between classical vector analysis and the calculus of differential forms. In large measure, this dabbling is inspired by the article “Differential forms as a basis for vector analysis – with applications to electrodynamics” by Nathan Schleifer, which appeared in the American Journal of Physics 51, 1139 (1983).

While I am not going to go into as much detail in this post as Schleifer did in his article, I do want to capture the flavor of what he was trying to do and lay the groundwork for following posts on proving some of the many vector identities that he tackles (and perhaps a few that he doesn’t). At the bottom of all these endeavors is the pervading similarity of the various integral theorems of vector calculus.

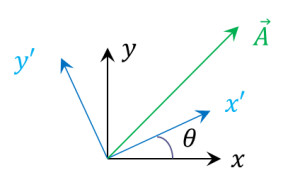

The basic premise of the operation is to put into one-to-one correspondence a classical vector expressed as

\[ \vec A = A_x \hat e_x + A_y \hat e_y + A_z \hat e_z \]

with an associated differential one-form

\[ \phi_{A} = A_x dx + A_y dy + A_z dz \; .\]

Both descriptions form a vector space; that is to say, the 10 axioms of a vector space are satisfied by linear combinations of $$\{dx, dy, dz\}$$ just as they are satisfied by linear combinations of $$\{\hat e_x , \hat e_y, \hat e_z\}$$.

If decomposition into components were all that was required, the two notations would offer the same benefits with the only difference being the superficial differences in the glyphs of one set compared to another.

However, we want more out of our sets of objects. Exactly what additional structure each set carries and how it is notationally encoded is the major difference between the two approaches.

Typically, classical vectors are given a metric structure such that

\[ \hat e_i \cdot \hat e_j = \delta_{ij} \]

and a ‘cross’ structure such that

\[ \hat e_i \times \hat e_j = \epsilon_{ijk} \hat e_k \; .\]

Differential one-forms differ in that they are given only the ‘cross’ structure in the form

\[ dy \wedge dz = - dz \wedge dy \; ,\]

\[ dz \wedge dx = - dx \wedge dz \; ,\]

\[ dx \wedge dy = - dy \wedge dx \; ,\]

where the wedge product $$dx \wedge dy$$ is sensibly called a two-form, indicating that it is made up of two one-forms.

Like the vector cross-product, the wedge product anti-commutes, implying that

\[ dx \wedge dx = 0 \; ,\]

with an analogous formula for $$dy$$ and $$dz$$.

Despite these similarities, the wedge product differs in one significant way from its vector cross-product cousin in that it is also associative so that no ambiguity exists by saying

\[ dx \wedge (dy \wedge dz) = (dx \wedge dy) \wedge dz = dx \wedge dy \wedge dz \; .\]
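The anti-commutation and associativity rules above can be checked with a small sketch. It assumes a representation that is not in Schleifer's article: a form is stored as a dictionary mapping a sorted tuple of basis indices (0 for $$dx$$, 1 for $$dy$$, 2 for $$dz$$) to a coefficient, and the helper names `sort_sign` and `wedge` are purely illustrative.

```python
from itertools import product

def sort_sign(indices):
    """Return (sign, sorted tuple) for a tuple of basis indices.

    The sign is 0 when an index repeats, since dx ^ dx = 0.
    """
    idx = list(indices)
    if len(set(idx)) != len(idx):
        return 0, ()
    sign = 1
    # Bubble sort; every adjacent swap flips the sign (anti-commutation).
    for i in range(len(idx)):
        for j in range(len(idx) - 1 - i):
            if idx[j] > idx[j + 1]:
                idx[j], idx[j + 1] = idx[j + 1], idx[j]
                sign = -sign
    return sign, tuple(idx)

def wedge(a, b):
    """Wedge product of two forms stored as {sorted index tuple: coefficient}."""
    out = {}
    for (ia, ca), (ib, cb) in product(a.items(), b.items()):
        sign, key = sort_sign(ia + ib)
        if sign:
            out[key] = out.get(key, 0) + sign * ca * cb
    # Drop terms whose coefficients cancelled.
    return {k: v for k, v in out.items() if v != 0}

# Basis one-forms (hypothetical encoding: x=0, y=1, z=2).
dx, dy, dz = {(0,): 1}, {(1,): 1}, {(2,): 1}

print(wedge(dx, dy))  # {(0, 1): 1}
print(wedge(dy, dx))  # {(0, 1): -1}
print(wedge(dx, dx))  # {}
print(wedge(dx, wedge(dy, dz)) == wedge(wedge(dx, dy), dz))  # True
```

Storing only sorted index tuples means each two-form or three-form has a single canonical key, so the sign bookkeeping lives entirely in `sort_sign`.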

These algebraic rules are meant to sharply distinguish a point that is lost in classical vector analysis – namely that a vector defined in terms of a cross-product (i.e., $$\vec C = \vec A \times \vec B$$) is not really a vector of the same type as the two vectors on the right-hand side that define it. That this must be true is easily (if somewhat startlingly) seen by the fact that when we consider the transformation of coordinates, the right-hand side needs two factors (one for $$\vec A$$ and one for $$\vec B$$) while the left-hand side needs only one. From a geometric point of view, we are exploiting a natural association between a plane spanned by $$\vec A$$ and $$\vec B$$ and the unique vector (up to a sign) that is perpendicular to it. This latter observation is fully encoded into the structure of differential forms by defining the Hodge star operator such that

\[ dy \wedge dz \equiv *dx \; ,\]

\[ dz \wedge dx \equiv *dy \; ,\]

\[ dx \wedge dy \equiv *dz \; .\]

Also note that the Hodge star can be toggled in the sense that

\[ *(dy \wedge dz) = **dx = dx \; ,\]

with similar expressions for the other two combinations.

Since the wedge product is associative, the Hodge star can be extended to operate on a three-form to give

\[ *(dx \wedge dy \wedge dz) = 1 \; , \]

which is interpreted as associating volumes with scalars.

All the tools are in place to follow the program of Schleifer for classical vector analysis. Note: some of these tools need to be modified for either higher dimensional spaces, or for non-positive-definite spaces, or both.

At this point, one might worry that, since the formalism of differential forms has no imposed metric structure, we’ve thrown away the dot-product and all its useful properties. That this isn’t a problem can be seen as follows.

The classic dot-product is given by

\[ \vec A \cdot \vec B = A_x B_x + A_y B_y + A_z B_z \; .\]

The equivalent expression, in terms of forms, is

\[ \vec A \cdot \vec B \Leftrightarrow * ( \phi_{A} \wedge *\phi_B )\]

as can be seen as follows:

\[ \phi_B = B_x dx + B_y dy + B_z dz \; , \]

from which we get

\[ *\phi_B = B_x dy \wedge dz + B_y dz \wedge dx + B_z dx \wedge dy \; .\]

Now multiplying, from the left, by $$\phi_A$$ will lead to 9 products, each resulting in a three-form. However, due to the properties of the wedge product, only those products with a single $$dx$$, $$dy$$, and $$dz$$ will survive. These hardy terms are

\[ \phi_A \wedge *\phi_B = A_x B_x dx \wedge dy \wedge dz \\ + A_y B_y dy \wedge dz \wedge dx \\ + A_z B_z dz \wedge dx \wedge dy \; .\]

Since a cyclic permutation of the three one-forms takes an even number of swaps and so leaves the sign of the wedge product unchanged, this relation can be re-written as

\[ \phi_A \wedge *\phi_B = (A_x B_x + A_y B_y + A_z B_z) dx \wedge dy \wedge dz \; .\]

Applying the Hodge star operator immediately leads to

\[ * (\phi_A \wedge * \phi_B) = A_x B_x + A_y B_y + A_z B_z \; . \]
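The counting argument above (nine products, three survivors) can be reproduced numerically. The following is a minimal sketch under assumed conventions: indices 0, 1, 2 stand for $$x$$, $$y$$, $$z$$, the table `STAR` encodes $$*dx = dy \wedge dz$$ and its companions, and the function names are illustrative rather than anything taken from Schleifer's article.

```python
# Index pairs for *dx, *dy, *dz, i.e. dy^dz, dz^dx, dx^dy (x=0, y=1, z=2).
STAR = {0: (1, 2), 1: (2, 0), 2: (0, 1)}

def perm_sign(triple):
    """Sign of a triple as a permutation of (0, 1, 2); 0 if an index repeats."""
    if len(set(triple)) < 3:
        return 0
    inversions = sum(
        triple[i] > triple[j] for i in range(3) for j in range(i + 1, 3)
    )
    return -1 if inversions % 2 else 1

def dot_via_forms(A, B):
    """Return the coefficient of dx^dy^dz in phi_A ^ *phi_B
    together with the number of products that survive."""
    total, survivors = 0, 0
    for i in range(3):      # term A_i dx^i of phi_A
        for j in range(3):  # term B_j *(dx^j) of *phi_B
            sign = perm_sign((i,) + STAR[j])
            if sign:
                survivors += 1
                total += sign * A[i] * B[j]
    return total, survivors

A, B = (1, 2, 3), (4, -5, 6)
value, survivors = dot_via_forms(A, B)
print(value, survivors)                              # 12 3
print(value == sum(a * b for a, b in zip(A, B)))     # True
```

Because $$*(dx \wedge dy \wedge dz) = 1$$, the returned coefficient is already the scalar produced by the final Hodge star.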

In a similar fashion, the cross-product is seen to be

\[ \vec A \times \vec B \Leftrightarrow *(\phi_A \wedge \phi_B) \; .\]

The proof of this follows from

\[ \phi_A \wedge \phi_B = (A_x dx + A_y dy + A_z dz ) \wedge (B_x dx + B_y dy + B_z dz) \; ,\]

which expands to

\[ \phi_A \wedge \phi_B = (A_x B_y - A_y B_x) dx \wedge dy \\ + (A_y B_z - A_z B_y) dy \wedge dz \\ + (A_z B_x - A_x B_z) dz \wedge dx \; .\]

Applying the Hodge star operator gives

\[ *(\phi_A \wedge \phi_B) = (A_x B_y - A_y B_x) dz \\ + (A_y B_z - A_z B_y) dx \\ + (A_z B_x - A_x B_z) dy \equiv \phi_C \; ,\]

which gives an associated vector $$\vec C$$ of

\[ \phi_C \Leftrightarrow \vec C = (A_y B_z - A_z B_y) \hat e_x \\ + (A_z B_x - A_x B_z) \hat e_y \\ + (A_x B_y - A_y B_x) \hat e_z \; .\]
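As a quick sanity check of the cross-product correspondence, here is a hedged sketch that expands $$\phi_A \wedge \phi_B$$ term by term and compares the result with the familiar component formula. The function names and the use of a closed-form Levi-Civita symbol are my own illustrative choices, with indices 0, 1, 2 standing for $$x$$, $$y$$, $$z$$.

```python
def levi_civita(i, j, k):
    """Levi-Civita symbol for indices drawn from {0, 1, 2}."""
    return (i - j) * (j - k) * (k - i) // 2

def cross_via_forms(A, B):
    """Components (C_x, C_y, C_z) of the one-form *(phi_A ^ phi_B)."""
    # two_form[k] is the coefficient of *dx^k, i.e. of dy^dz, dz^dx, dx^dy.
    two_form = [0, 0, 0]
    for i in range(3):
        for j in range(3):
            for k in range(3):
                # dx^i ^ dx^j = eps_{kij} (*dx^k); the symbol vanishes if i == j.
                two_form[k] += levi_civita(k, i, j) * A[i] * B[j]
    # The Hodge star sends *dx^k back to dx^k, so the coefficients carry over.
    return tuple(two_form)

def cross_classical(A, B):
    """Textbook component formula for A x B."""
    return (
        A[1] * B[2] - A[2] * B[1],
        A[2] * B[0] - A[0] * B[2],
        A[0] * B[1] - A[1] * B[0],
    )

A, B = (1, 2, 3), (4, -5, 6)
print(cross_via_forms(A, B))                            # (27, 6, -13)
print(cross_via_forms(A, B) == cross_classical(A, B))   # True
```

Swapping the arguments flips every sign, which mirrors the anti-commutation of the wedge product.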

Next week, I’ll extend these results to the rest of classical vector analysis by means of the exterior derivative.