One of the more fun things to do with physical models is to mine general results without actually solving specific problems. It’s fun because it is relatively easy to do and gives some global insight into the problem that is not easily obtainable from examining individual cases. Usually, this type of data mining comes from considerations of symmetry or general principles and takes the form of clever manipulations of the underlying equations. Like any good riddle, the joy is in seeing the solution – the frustration is in trying to find it for the first time.

Of course, to find these nuggets of general fact, one needs to know, or at least firmly believe, they are there. Conservation laws for energy, momentum, and angular momentum are cherished principles that took many decades to be ferreted out of many specific cases.

Nonetheless, we can all stand on the shoulders of giants and enjoy the view, and that is what this post is all about. Standing on the shoulders of Maxwell, one can mine the equations that bear his name for all sorts of nuggets.

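The version of Maxwell’s equations that I’ll use describes the behavior of the macroscopic fields and is supplemented by the appropriate constitutive relations (for linear media, $$\vec D = \epsilon \vec E$$ and $$\vec B = \mu \vec H$$). The equations are: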

\[ \nabla \times \vec H = \vec J + \frac{\partial \vec D}{\partial t} \; , \]

\[ \nabla \times \vec E = -\frac{\partial \vec B}{\partial t} \; ,\]

\[ \nabla \cdot \vec D = \rho \; ,\]

and

\[ \nabla \cdot \vec B = 0 \; , \]

as the differential expressions for Ampere’s, Faraday’s, and Coulomb’s laws and the Monopole law, respectively.

Since dimensional analysis will be important below, note that the fields, sources, and material constants have units of

- $$\vec E$$ – $$[N/C]$$ or $$[V/m]$$

- $$\vec D$$ – $$[C/m^2]$$

- $$\vec B$$ – $$[Ns/Cm]$$ or $$[kg/Cs]$$

- $$\vec H$$ – $$[A/m]$$

- $$\vec J$$ – $$[C/m^2/s]$$

- $$\rho$$ – $$[C/m^3]$$

- $$\epsilon$$ – $$[C^2/Nm^2]$$

- $$\mu$$ – $$[N/A^2]$$
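As a quick sanity check on this list, here is a minimal Python sketch (my own bookkeeping, not part of the original derivation) that writes each unit as a tuple of exponents over the SI base units (kg, m, s, A). The helper names `mul`, `div`, and `pw` are illustrative assumptions. It confirms that the two forms quoted for $$\vec E$$ and for $$\vec B$$ agree and that the constitutive relations $$\vec D = \epsilon \vec E$$ and $$\vec B = \mu \vec H$$ balance dimensionally when $$\epsilon$$ carries $$[C^2/Nm^2]$$.

```python
# Units as exponent tuples over the SI base units (kg, m, s, A).

def mul(*units):
    """Product of units: add exponents component-wise."""
    return tuple(sum(exps) for exps in zip(*units))

def div(a, b):
    """Quotient a/b: subtract exponents component-wise."""
    return tuple(x - y for x, y in zip(a, b))

def pw(a, n):
    """Integer power of a unit: scale exponents by n."""
    return tuple(n * x for x in a)

kg, m, s, A = (1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)
C = mul(A, s)                          # coulomb = A s
N = div(mul(kg, m), pw(s, 2))          # newton = kg m / s^2
V = div(mul(N, m), C)                  # volt = J / C

E_units = div(N, C)                    # [N/C]
D_units = div(C, pw(m, 2))             # [C/m^2]
B_units = div(mul(N, s), mul(C, m))    # [Ns/Cm]
H_units = div(A, m)                    # [A/m]
eps_units = div(pw(C, 2), mul(N, pw(m, 2)))   # [C^2/Nm^2], i.e. F/m
mu_units = div(N, pw(A, 2))            # [N/A^2]

checks = {
    "E: N/C == V/m": E_units == div(V, m),
    "B: Ns/Cm == kg/Cs": B_units == div(kg, mul(C, s)),
    "D == eps E": D_units == mul(eps_units, E_units),
    "B == mu H": B_units == mul(mu_units, H_units),
    "1/(eps mu) is a speed squared": div((0, 0, 0, 0), mul(eps_units, mu_units)) == pw(div(m, s), 2),
    "C^2/Nm would NOT balance D == eps E": D_units != mul(div(pw(C, 2), mul(N, m)), E_units),
}
for name, ok in checks.items():
    print(f"{name}: {ok}")
```

Every entry prints `True`; the last line shows why the permittivity needs the extra factor of $$1/m$$.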

There are three very nice interrogations of these laws that yield: 1) charge conservation, 2) energy and momentum conservation (in the absence of external forces), and 3) the guarantee that initial fields satisfying the constraint equations (Coulomb’s law and the Monopole law) continue to satisfy them as the system is propagated forward in time. This last property will be called constraint preservation.

Charge Conservation

In their usual form, the Maxwell equations don’t immediately show that they honor the conservation of charge. That they actually do can be seen by starting with Ampere’s law and taking the divergence of both sides to get

\[ \nabla \cdot \left( \nabla \times \vec H = \vec J + \frac{\partial \vec D}{\partial t} \right ) \Rightarrow \nabla \cdot \nabla \times \vec H = \nabla \cdot \vec J + \nabla \cdot \frac{\partial \vec D}{\partial t} \; . \]

Since the divergence of a curl is zero, the left-hand side vanishes. Next, interchange the divergence operator with the partial time derivative $$\partial_t$$ to get

\[ 0 = \nabla \cdot \vec J + \frac{\partial ( \nabla \cdot \vec D )}{\partial t} \; . \]

Now use Coulomb’s law to replace $$\nabla \cdot \vec D$$ with $$\rho$$ in the last term; swapping the two sides of the equation then yields the familiar form of the charge conservation law

\[ \nabla \cdot \vec J + \frac{\partial \rho}{\partial t} = 0 \; .\]
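The whole argument hinges on the identity $$\nabla \cdot \nabla \times \vec H = 0$$. A minimal numerical illustration, assuming an arbitrary smooth test field `H` chosen only for this example, builds the curl and the divergence out of central differences. Because difference operators along different axes commute, the result is zero up to floating-point rounding, even though the curl itself is clearly nonzero.

```python
import math

STEP = 1e-3  # finite-difference step (assumed)

def H(p):
    """Arbitrary smooth test field, chosen only for illustration."""
    x, y, z = p
    return (math.sin(y) * z, x * x * math.cos(z), math.exp(-x) * y)

def d(f, axis, h=STEP):
    """Central-difference partial derivative of scalar function f along axis (0, 1, 2 = x, y, z)."""
    def df(p):
        plus, minus = list(p), list(p)
        plus[axis] += h
        minus[axis] -= h
        return (f(plus) - f(minus)) / (2 * h)
    return df

def component(F, i):
    """Scalar function returning the i-th component of vector field F."""
    return lambda p: F(p)[i]

def curl(F):
    Fx, Fy, Fz = (component(F, i) for i in range(3))
    return lambda p: (d(Fz, 1)(p) - d(Fy, 2)(p),
                      d(Fx, 2)(p) - d(Fz, 0)(p),
                      d(Fy, 0)(p) - d(Fx, 1)(p))

def divergence(F):
    return lambda p: sum(d(component(F, i), i)(p) for i in range(3))

point = (0.3, -0.7, 1.1)
curl_H = curl(H)
print("curl H     =", curl_H(point))              # clearly nonzero
print("div curl H =", divergence(curl_H)(point))  # zero up to rounding
```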

Energy and Momentum Conservation

The starting point for this conservation law is the Poynting theorem. To prove it, take the dot product of $$\vec H$$ with both sides of Faraday’s law to get

\[ \vec H \cdot \nabla \times \vec E = - \vec H \cdot \partial_t \vec B \]

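Rewrite the left-hand side using the vector identity (with $$\vec a$$ and $$\vec b$$ generic vector fields)

\[ \nabla \cdot (\vec a \times \vec b) = (\nabla \times \vec a) \cdot \vec b - \vec a \cdot (\nabla \times \vec b) \]

with $$\vec a = \vec E$$ and $$\vec b = \vec H$$, giving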

\[ \nabla \cdot ( \vec E \times \vec H ) + \vec E \cdot (\nabla \times \vec H) = - \vec H \cdot \partial_t \vec B \]

Now use Ampere’s law to substitute for $$\nabla \times \vec H$$, yielding

\[ \nabla \cdot ( \vec E \times \vec H ) + \vec E \cdot (\vec J + \partial_t \vec D) = - \vec H \cdot \partial_t \vec B \]

which, when rearranged, gives the Poynting theorem

\[ \nabla \cdot (\vec E \times \vec H ) + \vec H \cdot \partial_t \vec B + \vec E \cdot \partial_t \vec D = - \vec E \cdot \vec J \]

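For linear media with constant $$\epsilon$$ and $$\mu$$, the two time-derivative terms combine as $$\vec H \cdot \partial_t \vec B + \vec E \cdot \partial_t \vec D = \frac{1}{2} \partial_t ( \vec E \cdot \vec D + \vec H \cdot \vec B )$$, so make the following identifications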

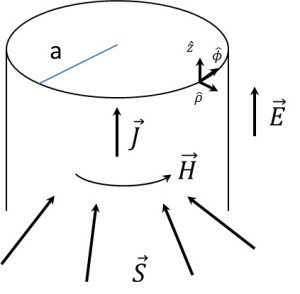

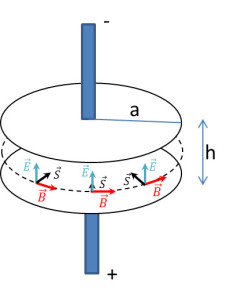

\[ \vec S = \vec E \times \vec H \]

and

\[ u = \frac{1}{2} \left( \vec E \cdot \vec D + \vec H \cdot \vec B \right) \]

to get the common form of

\[ \nabla \cdot \vec S + \partial_t u = - \vec E \cdot \vec J \]

for Poynting’s theorem.
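To see the theorem at work, here is a sketch that checks $$\nabla \cdot \vec S + \partial_t u = 0$$ (no current, so $$\vec E \cdot \vec J = 0$$) for an x-polarized plane wave in vacuum travelling along z, using central differences in z and t. The amplitude, wavelength, evaluation point, and step size are arbitrary assumptions; the constants are the CODATA 2018 values of $$\epsilon_0$$ and $$\mu_0$$.

```python
import math

EPS0 = 8.8541878128e-12          # vacuum permittivity, F/m (CODATA 2018)
MU0 = 1.25663706212e-6           # vacuum permeability, N/A^2 (CODATA 2018)
C_LIGHT = 1.0 / math.sqrt(EPS0 * MU0)

E0 = 1.0                         # field amplitude in V/m (assumed)
K = 2 * math.pi / 1.0            # wavenumber for a 1 m wavelength (assumed)
OMEGA = C_LIGHT * K              # vacuum dispersion relation omega = c k

def fields(z, t):
    """x-polarized plane wave travelling along +z: returns (E_x, B_y)."""
    phase = math.cos(K * z - OMEGA * t)
    return E0 * phase, (E0 / C_LIGHT) * phase

def energy_density(z, t):
    ex, by = fields(z, t)
    return 0.5 * (EPS0 * ex**2 + by**2 / MU0)   # u = (E.D + H.B)/2 with D = eps0 E, H = B/mu0

def poynting_z(z, t):
    ex, by = fields(z, t)
    return ex * by / MU0                         # S = E x H; x-hat cross y-hat = z-hat

def poynting_check(z, t, dz=1e-6):
    """Return (div S + du/dt, du/dt) using central differences in z and t."""
    dt = dz / C_LIGHT
    div_s = (poynting_z(z + dz, t) - poynting_z(z - dz, t)) / (2 * dz)
    du_dt = (energy_density(z, t + dt) - energy_density(z, t - dt)) / (2 * dt)
    return div_s + du_dt, du_dt

residual, du_dt = poynting_check(0.1, 0.0)
print(f"du/dt = {du_dt:.4e} J/m^3/s, residual = {residual:.1e}")
```

The residual is many orders of magnitude smaller than $$\partial_t u$$ itself: the energy leaving a point through $$\nabla \cdot \vec S$$ is exactly what the local energy density loses.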

Now for some dimensional analysis. The quantities $$u$$, $$\vec S$$, $$\vec E \cdot \vec J$$, $$\nabla \cdot \vec S$$, and $$\partial_t u$$ have units of:

- $$u$$ – $$[N/C][C/m^2] + [Ns/Cm][C/sm]=[N/m^2] = [J/m^3]$$

- $$\vec S$$ – $$[N/C][C/sm] = [N/ms] = [Nm/m^2s] = [J/m^2s]$$

- $$\vec E \cdot \vec J$$ – $$[N/C][C/m^2s]=[Nm/m^3s] = [J/m^3s]$$

- $$\nabla \cdot \vec S $$ – $$[N/m^2s] = [Nm/m^3s] = [J/m^3s]$$

- $$\partial_t u$$ – $$[J/m^3][1/s] = [J/m^3s]$$
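Representing each unit as a tuple of exponents over the SI base units (kg, m, s, A) lets the list above be checked mechanically. This small self-contained sketch (helper names assumed for illustration) confirms that both halves of $$u$$ agree and that $$\nabla \cdot \vec S$$, $$\partial_t u$$, and $$\vec E \cdot \vec J$$ all reduce to $$[J/m^3s]$$.

```python
# Each unit is a tuple of exponents over the SI base units (kg, m, s, A).

def mul(*units):
    return tuple(sum(exps) for exps in zip(*units))

def div(a, b):
    return tuple(x - y for x, y in zip(a, b))

kg, m, s, A = (1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)
C = mul(A, s)
N = div(mul(kg, m), mul(s, s))
JOULE = mul(N, m)
m2, m3 = mul(m, m), mul(m, m, m)

E = div(N, C)                       # electric field
D = div(C, m2)                      # electric displacement
B = div(mul(N, s), mul(C, m))       # magnetic flux density
H = div(A, m)                       # magnetic field
J_CUR = div(C, mul(m2, s))          # current density

u = mul(E, D)                       # electric half of u
S = mul(E, H)                       # Poynting vector
power_density = mul(E, J_CUR)       # E . J

results = {
    "E.D and H.B agree": mul(H, B) == u,
    "u is J/m^3": u == div(JOULE, m3),
    "S is J/(m^2 s)": S == div(JOULE, mul(m2, s)),
    "div S is J/(m^3 s)": div(S, m) == div(JOULE, mul(m3, s)),
    "du/dt matches div S": div(u, s) == div(S, m),
    "E.J matches div S": power_density == div(S, m),
}
for name, ok in results.items():
    print(f"{name}: {ok}")
```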

The obvious interpretation is that $$u$$ is an energy density, $$\vec S$$ is the energy flux (power per unit area), and $$\vec E \cdot \vec J$$ is the rate at which the electric field does work on the charges per unit volume (a power density).

There is also a way to connect the Poynting theorem to momentum, suggested by the fact that photons obey $$E^2 = c^2 \vec p^2$$ (here $$E$$ is the photon energy, not the electric field). For simplicity, work in free space, where $$\vec H = \vec B/\mu_0$$ with $$\mu_0$$ the permeability of free space, so that the Poynting vector can be written in terms of the magnetic flux density $$\vec B$$ as

\[ \vec S = \frac{1}{\mu_0} \vec E \times \vec B \; .\]

Checking the units of the Poynting vector from this definition gives $$\underbrace{ [A^2/N] }_{1/\mu_0} \underbrace{ [N/C] }_{\vec E} \underbrace{ [Ns/Cm] }_{\vec B} = [N/ms]$$. These units can be put into a more suggestive form, either as $$[N/ms] = [Nm/m^3][m/s] = [J/m^3][m/s]$$, which suggests interpreting the Poynting vector as an energy density times a speed, or as $$[N/ms] = [Nm][1/m^2/s] = [J/m^2/s] = [J/s/m^2] = [W/m^2]$$, which represents a power flux.
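The "energy density times a speed" reading can be checked numerically for a vacuum plane wave at a crest, where $$\vec E \perp \vec B$$ and $$|\vec B| = |\vec E|/c$$. In this sketch the amplitude `E0` is an arbitrary assumption; the relation $$|\vec S| = c\,u$$ does not depend on it.

```python
import math

EPS0 = 8.8541878128e-12   # F/m (CODATA 2018)
MU0 = 1.25663706212e-6    # N/A^2 (CODATA 2018)
c = 1.0 / math.sqrt(EPS0 * MU0)

E0 = 100.0                # electric field amplitude in V/m (assumed; any value works)
B0 = E0 / c               # vacuum plane wave: |B| = |E| / c, with E perpendicular to B

S = E0 * B0 / MU0                      # |S| = |E x B| / mu0
u_electric = 0.5 * EPS0 * E0**2
u_magnetic = 0.5 * B0**2 / MU0
u = u_electric + u_magnetic

print(f"c     = {c:.6e} m/s")
print(f"S     = {S:.6e} W/m^2")
print(f"c * u = {c * u:.6e} W/m^2")
```

The electric and magnetic halves of $$u$$ come out equal, and the energy is carried at exactly $$c$$.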

Dividing by the speed of light, written as $$c = 1/\sqrt{\epsilon_0 \mu_0}$$, then gives

\[ \frac{1}{c} \vec S = \sqrt{ \frac{\epsilon_0}{\mu_0} } \vec E \times \vec B = \sqrt{ \epsilon_0 \mu_0 } \vec E \times \vec H \]

which represents either a momentum flux or a momentum density times a speed. Since $$\vec S/c$$ has units of momentum density times speed, one may guess that $$\vec S/c^2$$ is the field momentum density. The same identification carries over to a linear medium once $$c$$ is replaced by the speed of light in the medium, $$v = 1/\sqrt{\mu \epsilon}$$, which allows the general definition

\[ \frac{1}{v^2} \vec S = \underbrace{ \mu \epsilon \vec E \times \vec H}_{\text{field momentum density}} \; .\]
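In vacuum ($$\mu \epsilon \to \mu_0 \epsilon_0$$) the momentum density $$\vec g = \mu_0 \epsilon_0 \vec E \times \vec H$$ can be compared directly with $$\vec S/c^2$$. For a plane wave, $$|\vec g|\,c = u$$, the field analogue of the photon relation between energy and momentum. The sketch below assumes an x-polarized wave moving along +z with an arbitrary amplitude; `cross` is a hand-written helper.

```python
import math

EPS0 = 8.8541878128e-12   # F/m (CODATA 2018)
MU0 = 1.25663706212e-6    # N/A^2 (CODATA 2018)
c = 1.0 / math.sqrt(EPS0 * MU0)

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

E0 = 50.0                                  # amplitude in V/m (assumed)
E = (E0, 0.0, 0.0)                         # x-polarized wave moving along +z
H = (0.0, E0 / (MU0 * c), 0.0)             # H = B / mu0 with |B| = |E| / c

S = cross(E, H)                                        # Poynting vector, W/m^2
g = tuple(MU0 * EPS0 * comp for comp in cross(E, H))   # momentum density, kg/(m^2 s)
u = 0.5 * (EPS0 * E0**2 + MU0 * H[1]**2)               # energy density, J/m^3

print("g       =", g)
print("S / c^2 =", tuple(comp / c**2 for comp in S))
print("|g| c   =", g[2] * c, " u =", u)
```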

Frankl, in his Electromagnetic Theory (pp. 203-4), presents a nice argument, based on the Lorentz force law, that relates the field momentum to the particle momentum and shows that

\[ \vec F_{total} = \frac{d}{dt}\left[ \vec p_{particles} + \int d^3 r \, \mu \epsilon \vec E \times \vec H \right] \; .\]

To see this, start with the Lorentz force law ($$\vec F = q (\vec E + \vec v \times \vec B)$$), rewritten as a force density in terms of the charge and current densities, and use Ampere’s and Coulomb’s laws to eliminate the sources:

\[ \vec f = \rho \vec E + \vec J \times \vec B = (\nabla \cdot \vec D) \vec E - \vec B \times (\nabla \times \vec H) - \partial_t \vec D \times \vec B \; .\]

Using the Faraday and Monopole laws gives

\[ 0 = - \vec D \times (\nabla \times \vec E) - \vec D \times \partial_t \vec B \]

and

\[ 0 = \vec H \, \nabla \cdot \vec B \; .\]

Adding the above relations to the force density and specializing to a linear, homogeneous medium (constant $$\epsilon$$ and $$\mu$$, with $$\vec D = \epsilon \vec E$$ and $$\vec B = \mu \vec H$$) gives

\[ \vec f = \epsilon ( \vec E \, \nabla \cdot \vec E - \vec E \times (\nabla \times \vec E) ) + \mu ( \vec H \, \nabla \cdot \vec H - \vec H \times ( \nabla \times \vec H ) ) - \mu \epsilon \, \partial_t ( \vec E \times \vec H ) \; .\]

Integrate over all space using the identity

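\[ \int d^3r \, \left(\vec G \, \nabla \cdot \vec G - \vec G \times (\nabla \times \vec G) \right) = \int d^2S \left( \vec G (\vec G \cdot \hat n) - \frac{1}{2} G^2 \hat n \right) \]

which converts the first two volume integrals into the surface integrals

\[ I_{\vec E} = \epsilon \int d^2S \left( \vec E (\vec E \cdot \hat n) - \frac{1}{2} E^2 \hat n \right) \quad \text{and} \quad I_{\vec H} = \mu \int d^2S \left( \vec H (\vec H \cdot \hat n) - \frac{1}{2} H^2 \hat n \right) \; .\]

Since the volume integral of the force density is the total force on the particles, i.e. the rate of change of their momentum, this results in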

\[ \underbrace{ \frac{d}{dt} \vec p_{particles} }_{\vec F_{particles}} = I_{\vec E} + I_{\vec H} - \frac{d}{dt} \int d^3r \, \mu \epsilon \vec E \times \vec H \; .\]
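The integral identity used here follows from the divergence theorem applied to the pointwise identity $$\vec G (\nabla \cdot \vec G) - \vec G \times (\nabla \times \vec G) = \nabla \cdot \left( \vec G \vec G - \tfrac{1}{2} G^2 \mathbf{I} \right)$$, whose right-hand side is the divergence of a Maxwell-stress-like tensor. This sketch checks the pointwise form with central differences for an arbitrary smooth test field `G`; the field, the point, and the step size are assumptions made only for the example.

```python
import math

def G(p):
    """Arbitrary smooth test field (an assumption for illustration)."""
    x, y, z = p
    return (y * z + math.sin(x), x * math.cos(y), math.exp(0.5 * z) * x * y)

def partial(f, axis, p, h=1e-4):
    """Central-difference derivative of scalar function f along axis at point p."""
    plus, minus = list(p), list(p)
    plus[axis] += h
    minus[axis] -= h
    return (f(plus) - f(minus)) / (2 * h)

def lhs(p):
    """G (div G) - G x (curl G), built from the Jacobian dG_i/dx_a."""
    g = G(p)
    jac = [[partial(lambda q, i=i: G(q)[i], a, p) for a in range(3)] for i in range(3)]
    div_g = jac[0][0] + jac[1][1] + jac[2][2]
    curl_g = (jac[2][1] - jac[1][2], jac[0][2] - jac[2][0], jac[1][0] - jac[0][1])
    g_cross_curl = (g[1] * curl_g[2] - g[2] * curl_g[1],
                    g[2] * curl_g[0] - g[0] * curl_g[2],
                    g[0] * curl_g[1] - g[1] * curl_g[0])
    return tuple(g[j] * div_g - g_cross_curl[j] for j in range(3))

def T(p, i, j):
    """Stress-like tensor T_ij = G_i G_j - (1/2) delta_ij |G|^2."""
    g = G(p)
    return g[i] * g[j] - (0.5 * sum(comp * comp for comp in g) if i == j else 0.0)

def div_T(p):
    """(div T)_j = sum_i dT_ij/dx_i (T is symmetric)."""
    return tuple(sum(partial(lambda q, i=i, j=j: T(q, i, j), i, p) for i in range(3))
                 for j in range(3))

point = (0.4, -0.3, 0.8)
print("G div G - G x curl G =", lhs(point))
print("div(GG - G^2 I / 2)  =", div_T(point))
```

Integrating $$\nabla \cdot T$$ over a volume and applying the divergence theorem gives $$\int d^2S \, T \cdot \hat n$$, which is exactly the surface integrand $$\vec G (\vec G \cdot \hat n) - \frac{1}{2} G^2 \hat n$$.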

Finally, Frankl attaches meaning to the surface integrals by first considering static fields. Then the field-momentum term vanishes, which picks out the total force as

\[ \frac{d}{dt} \vec p_{particles} = \underbrace{ I_{\vec E} + I_{\vec H} }_{\text{total force}} \; .\]

Since the instantaneous configuration of the fields and the particles cannot ‘know’ the future, $$I_{\vec E} + I_{\vec H}$$, which depends only on the instantaneous fields, must always be the total force. Bringing the field piece over to the left-hand side gives

\[ \frac{d}{dt} \vec p_{particles} + \frac{d}{dt} \int d^3r \, \mu \epsilon \vec E \times \vec H = \underbrace{ I_{\vec E} + I_{\vec H} }_{\text{total force}} \; , \]

which suggests the identification of the field momentum as

\[ \vec p_{field} = \int d^3r \, \mu \epsilon \vec E \times \vec H \; ,\]

which is exactly the interpretation obtained above: the Poynting vector divided by the square of the speed of light (in the medium, $$1/\sqrt{\mu \epsilon}$$).

Now, if the system is closed in the sense that the electric and magnetic fields vanish on its boundary, then the surface integrals vanish, energy and momentum cannot flow out of the system, and both are conserved. Energy and momentum do flow between the particles and the fields, with one gaining as the other loses, but none of the total energy or momentum is ever lost.

Constraint Preservation

The two Maxwell equations involving the curl operator are dynamical: they tie spatial derivatives of the fields to time derivatives. The two Maxwell equations involving the divergence operator contain no time derivatives. These latter two are interpreted as constraint equations that the fields must satisfy at every instant. The operative question is this: if the fields satisfy the constraints at some initial time, does their subsequent evolution, described by the dynamical equations, continue to satisfy them? This is a very important point, as any theory that fails to keep its constraints satisfied is physically untenable. To check whether Maxwell’s equations preserve these constraints, start by taking the divergence of Ampere’s law, as in the charge conservation case above, to get

\[ \nabla \cdot \vec J + \partial_{t} (\nabla \cdot \vec D ) = 0 \; .\]

This time, instead of hunting for charge conservation, we use charge conservation to eliminate $$\nabla \cdot \vec J$$ in favor of $$-\partial_t \rho$$, which gives

\[ -\partial_t \rho + \partial_t (\nabla \cdot \vec D) = 0 \Rightarrow \partial_t (\nabla \cdot \vec D - \rho ) = 0 \; .\]

Note that a logical purist may object that this constraint and charge conservation are not really independent of each other, so that this ‘proof’ is merely a tautology. That is true, but it is not a flaw. It simply means that charge conservation and the preservation of Coulomb’s law go hand in hand.
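A discrete sketch makes the "tautology" concrete. On a 1D grid (the grid size, time step, drift speed, and initial blob are all assumptions for illustration), $$D$$ is advanced with 1D Ampere’s law, $$\partial_t D = -J$$ (in 1D the curl term drops out), while $$\rho$$ is advanced with a discrete continuity equation built from the same face currents. The Coulomb residual $$\partial_x D - \rho$$ then stays at its initial, rounding-level value; freezing $$D$$ instead breaks the constraint as soon as the charge moves.

```python
import math

N, DX, DT, V = 200, 0.05, 0.01, 1.0      # cells, spacing, time step, drift speed (assumed)

# Cell-centred charge density: a Gaussian blob centred at x = 3 (assumed initial data)
rho = [math.exp(-(((i + 0.5) * DX - 3.0) / 0.3) ** 2) for i in range(N)]

# Face-centred D[k], k = 0..N, built so that (D[i+1] - D[i]) / DX = rho[i] (Coulomb's law)
D = [0.0]
for r in rho:
    D.append(D[-1] + DX * r)
D_initial = list(D)

def coulomb_residual(D, rho):
    """Largest violation of the discrete constraint dD/dx = rho."""
    return max(abs((D[i + 1] - D[i]) / DX - rho[i]) for i in range(len(rho)))

def step(D, rho):
    # Upwind face currents for V > 0; no current through the two end faces
    J = [0.0] + [V * rho[k - 1] for k in range(1, N)] + [0.0]
    rho_new = [rho[i] - DT / DX * (J[i + 1] - J[i]) for i in range(N)]  # continuity equation
    D_new = [D[k] - DT * J[k] for k in range(N + 1)]                    # 1D Ampere: dD/dt = -J
    return D_new, rho_new

charge_before = sum(rho) * DX
for _ in range(200):
    D, rho = step(D, rho)

print("Coulomb residual, D evolved:", coulomb_residual(D, rho))
print("Coulomb residual, D frozen: ", coulomb_residual(D_initial, rho))
print("charge change:", sum(rho) * DX - charge_before)
```

The residual change per step is $$-\Delta t\,(J_{i+1}-J_i)/\Delta x + \Delta t\,(J_{i+1}-J_i)/\Delta x = 0$$, so the constraint survives precisely because the discrete continuity equation holds.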

Likewise, taking the divergence of Faraday’s law gives

\[ \nabla \cdot \left( \nabla \times \vec E + \partial_t \vec B \right) = 0 \; , \]

which, because the divergence of a curl is zero, becomes

\[ \partial_t (\nabla \cdot \vec B) = 0 \; ,\]

assuming that $$\partial_t$$ commutes with $$\nabla \cdot$$.

Thus, once both Coulomb’s law and the Monopole law are satisfied by the initial conditions, they stay satisfied for all time.
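Numerical field solvers face the same question in discrete form. The sketch below is a swapped-in illustration, not something from the source: a 2D transverse-magnetic update in the style of Yee’s staggered-grid (FDTD) scheme on a periodic grid, in units with $$\epsilon = \mu = 1$$ and unit grid spacing (all assumptions). Because the staggered difference operators commute, the discrete divergence of $$\vec B$$ stays at its initial value of zero to rounding error, mirroring $$\partial_t (\nabla \cdot \vec B) = 0$$. In this geometry $$\vec D$$ points along z and depends only on x and y, so $$\nabla \cdot \vec D = 0$$ holds trivially.

```python
import math

N = 40          # grid points per side, periodic boundaries (assumed)
DT = 0.5        # time step; units with eps = mu = 1, dx = dy = 1, so c = 1 (assumed)
STEPS = 60

# TM mode: Ez[i][j] at (i, j), Hx[i][j] at (i, j + 1/2), Hy[i][j] at (i + 1/2, j)
Ez = [[math.exp(-((i - N / 2) ** 2 + (j - N / 2) ** 2) / 8.0) for j in range(N)] for i in range(N)]
Hx = [[0.0] * N for _ in range(N)]
Hy = [[0.0] * N for _ in range(N)]

def max_div_H(Hx, Hy):
    """Largest |dHx/dx + dHy/dy| on the cell corners (i + 1/2, j + 1/2)."""
    return max(abs((Hx[(i + 1) % N][j] - Hx[i][j]) + (Hy[i][(j + 1) % N] - Hy[i][j]))
               for i in range(N) for j in range(N))

def step(Ez, Hx, Hy):
    # Faraday (mu = 1): dHx/dt = -dEz/dy, dHy/dt = +dEz/dx
    for i in range(N):
        for j in range(N):
            Hx[i][j] -= DT * (Ez[i][(j + 1) % N] - Ez[i][j])
            Hy[i][j] += DT * (Ez[(i + 1) % N][j] - Ez[i][j])
    # Ampere with J = 0 (eps = 1): dEz/dt = dHy/dx - dHx/dy; index -1 wraps around
    for i in range(N):
        for j in range(N):
            Ez[i][j] += DT * ((Hy[i][j] - Hy[i - 1][j]) - (Hx[i][j] - Hx[i][j - 1]))

worst = 0.0
for _ in range(STEPS):
    step(Ez, Hx, Hy)
    worst = max(worst, max_div_H(Hx, Hy))

print("max |div B| over the run:", worst)
print("max |Hx| (fields did evolve):", max(abs(v) for row in Hx for v in row))
```

The time step is kept below the 2D stability limit $$\Delta t \le \Delta x / (c\sqrt{2})$$; the divergence result itself would hold for any step, since it is an algebraic property of the update.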